Zhuohuang Zhang (zhuozhan@iu.edu), Yong Xu, Meng Yu, Shi-xiong Zhang, Lianwu Chen, Donald S. Williamson, Dong Yu

Abstract:

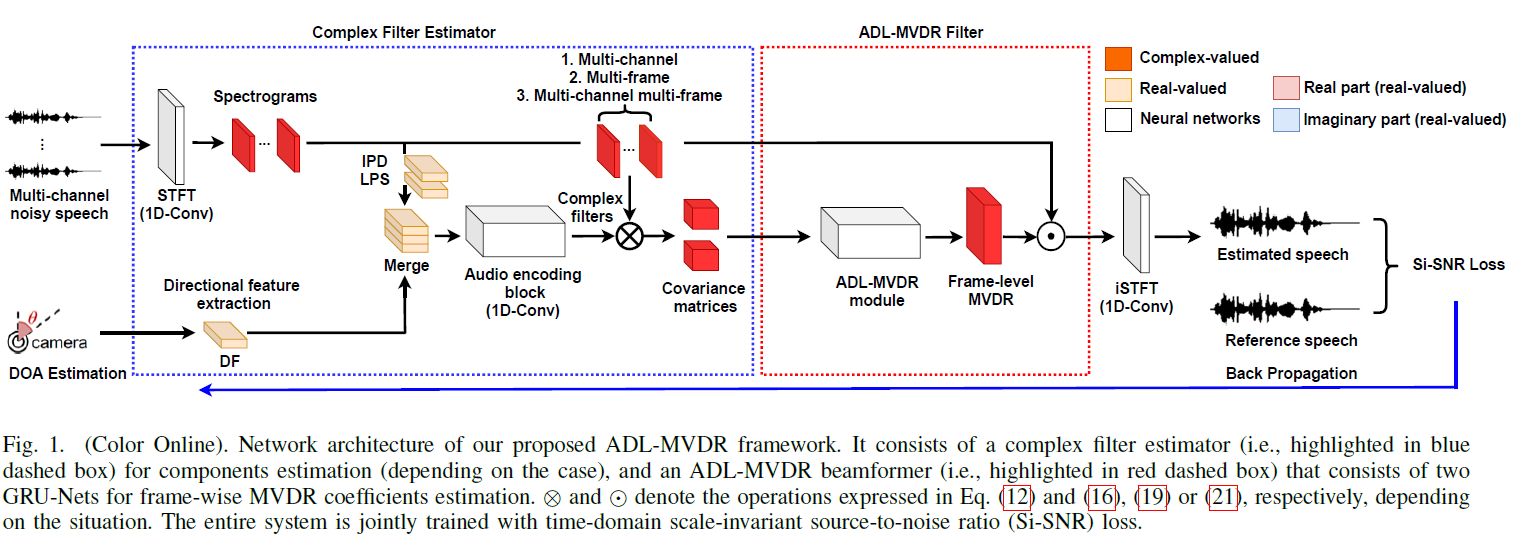

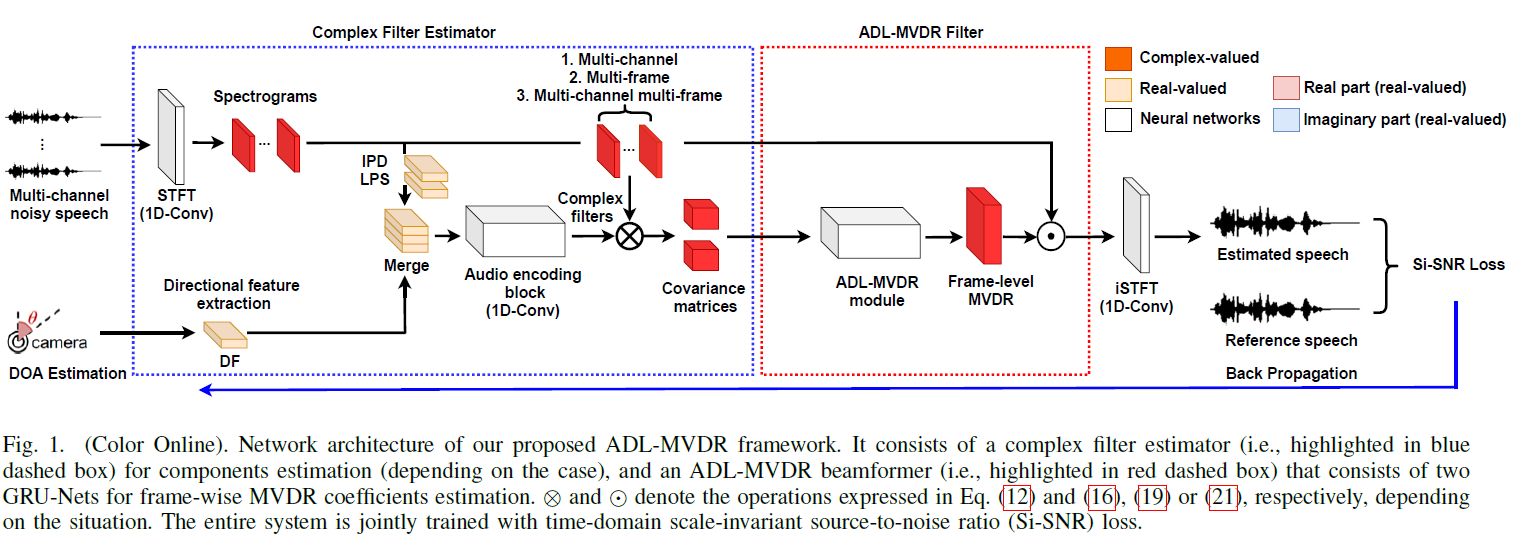

Many purely neural-network-based speech separation approaches have been proposed that greatly improve objective assessment scores, but they often introduce nonlinear distortions that are harmful to automatic speech recognition (ASR) [1]. Minimum variance distortionless response (MVDR) filters strive to remove nonlinear distortions; however, these approaches either are not optimal for removing residual (linear) noise [2,3] or are unstable when used jointly with neural networks. In this study, we propose a multi-channel multi-frame (MCMF) all deep learning (ADL)-MVDR approach for target speech separation, which extends our preliminary multi-channel ADL-MVDR approach [4]. The MCMF ADL-MVDR handles different numbers of microphone channels in one framework and addresses both linear and nonlinear distortions. Spatio-temporal cross correlations are also fully utilized in the proposed approach. The proposed system is evaluated on a Mandarin audio-visual corpus [5,6] and is compared with several state-of-the-art approaches. Experimental results demonstrate the superiority of our proposed framework under different scenarios and across several objective evaluation metrics, including ASR performance.

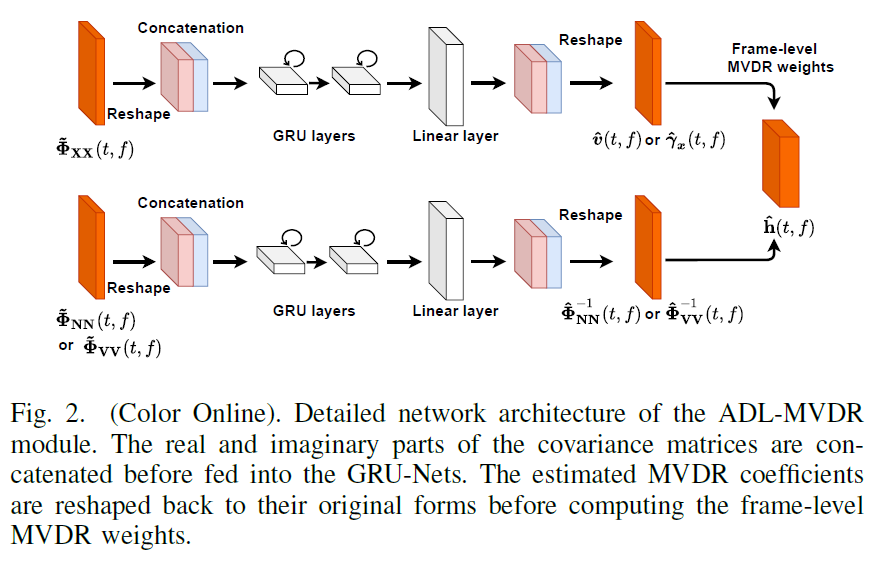

(Please refer to the paper for details).


Systems evaluated:

1. NN with cRM: A Conv-TasNet variant [5,6] with complex ratio mask (denoted as cRM)

2. NN with cRF: A Conv-TasNet variant [5,6] with 3X3 complex ratio filtering (denoted as cRF; please refer to the paper for details)

3. MVDR with cRM: An MVDR system with complex ratio mask [3]

4. MVDR with cRF: An MVDR system with 3X3 cRF

5. Multi-tap MVDR with cRM: A multi-tap MVDR system with complex ratio mask [3]

6. Multi-tap MVDR with cRF: A multi-tap MVDR system with 3X3 cRF

7. Proposed Multi-frame (MF) (size-2) ADL-MVDR with cRF: Our proposed MF ADL-MVDR system with 3X3 cRF and MF information from t-1 to t

8. Proposed MF (size-3) ADL-MVDR with cRF: Our proposed MF ADL-MVDR system with 3X3 cRF and MF information from t-1 to t+1

9. Proposed MF (size-5) ADL-MVDR with cRF: Our proposed MF ADL-MVDR system with 3X3 cRF and MF information from t-2 to t+2

10. Proposed Multi-channel (MC) ADL-MVDR with cRF: Our proposed MC ADL-MVDR system with 3X3 cRF

11. Proposed Multi-channel Multi-frame (MCMF) ADL-MVDR with cRF: Our proposed MCMF ADL-MVDR system with 3-channel 3-frame

12. Proposed MCMF ADL-MVDR with cRF: Our proposed MCMF ADL-MVDR system with 9-channel 3-frame
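To make the difference between cRM and cRF in the list above concrete, here is a minimal NumPy sketch. It assumes that a 3X3 cRF estimates each time-frequency bin as a weighted sum of the mixture over a 3X3 neighbourhood (one frame and one frequency bin on each side), and that a cRM is the special case with only the centre tap. The function name `apply_crf`, the array layout, and the zero padding at the edges are illustrative assumptions, not taken from the paper; please refer to the paper for the exact definition.

```python
import numpy as np

def apply_crf(mixture, crf, half_t=1, half_f=1):
    """Apply a complex ratio filter to a complex spectrogram (illustrative sketch).

    mixture: complex array of shape (T, F), the mixture STFT.
    crf:     complex array of shape (T, F, 2*half_t+1, 2*half_f+1),
             one small filter per time-frequency bin.
    Returns a complex array of shape (T, F).
    """
    T, F = mixture.shape
    # Zero-pad so that every bin has a full neighbourhood (assumed edge handling).
    padded = np.pad(mixture, ((half_t, half_t), (half_f, half_f)))
    out = np.zeros((T, F), dtype=complex)
    for dt in range(2 * half_t + 1):
        for df in range(2 * half_f + 1):
            # padded[dt:dt+T, df:df+F] is the mixture shifted by
            # (dt - half_t) frames and (df - half_f) frequency bins.
            out += crf[:, :, dt, df] * padded[dt:dt + T, df:df + F]
    return out

# Toy example: a cRF with only its centre tap set behaves like a cRM.
rng = np.random.default_rng(0)
Y = rng.standard_normal((5, 4)) + 1j * rng.standard_normal((5, 4))
crm = rng.standard_normal((5, 4)) + 1j * rng.standard_normal((5, 4))
crf = np.zeros((5, 4, 3, 3), dtype=complex)
crf[:, :, 1, 1] = crm
estimate = apply_crf(Y, crf)
```

With all nine taps active, each output bin can also draw on its neighbours in time and frequency, which a single-tap cRM cannot do.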

Wearing headphones is strongly recommended for the best listening experience.

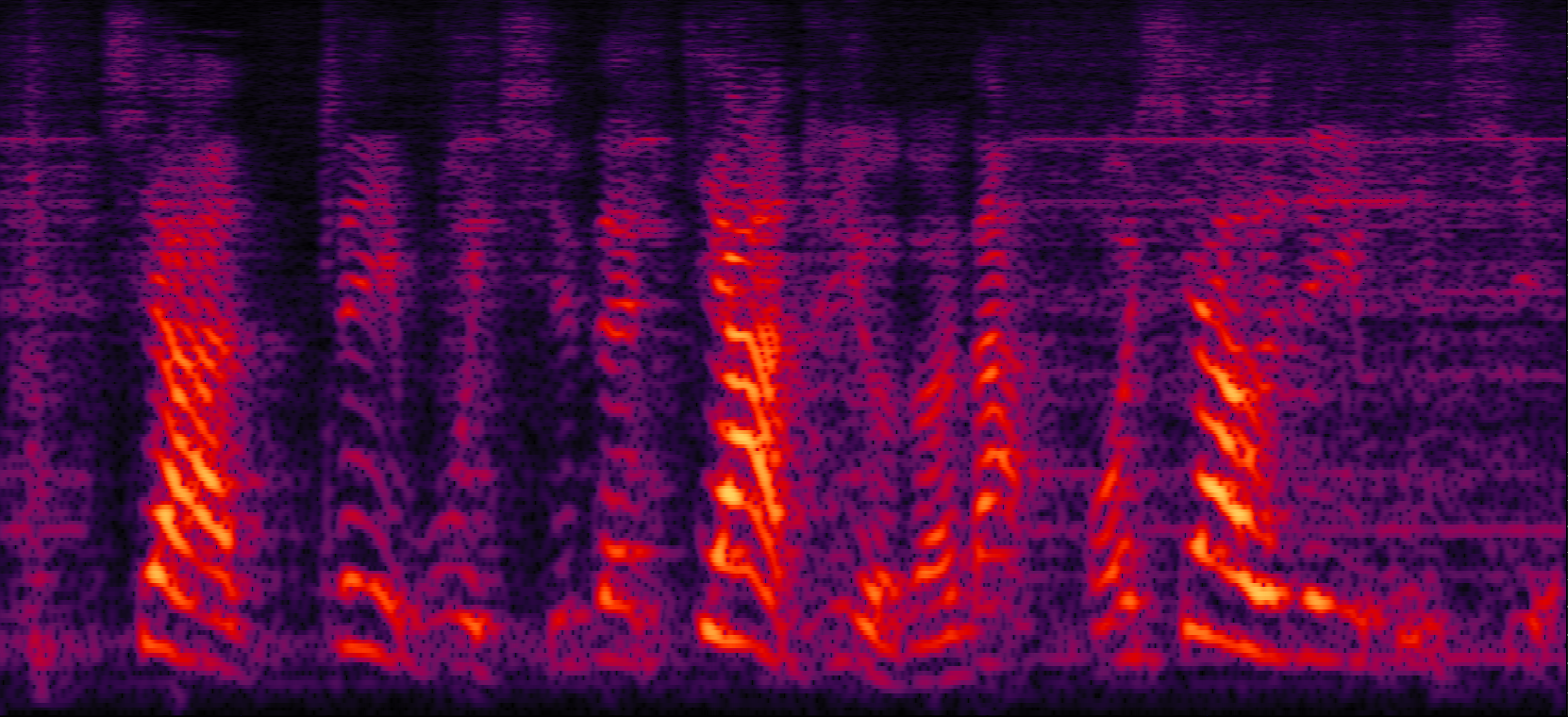

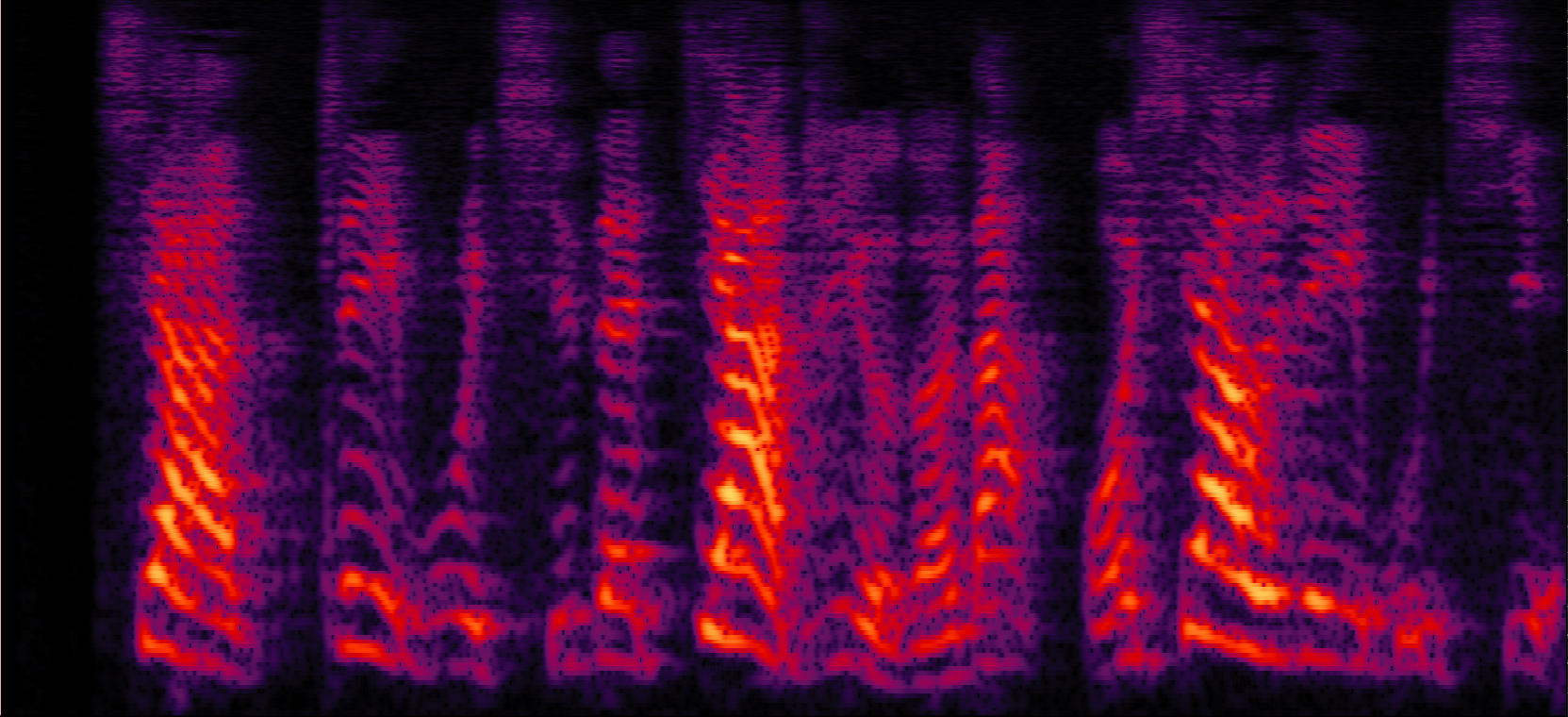

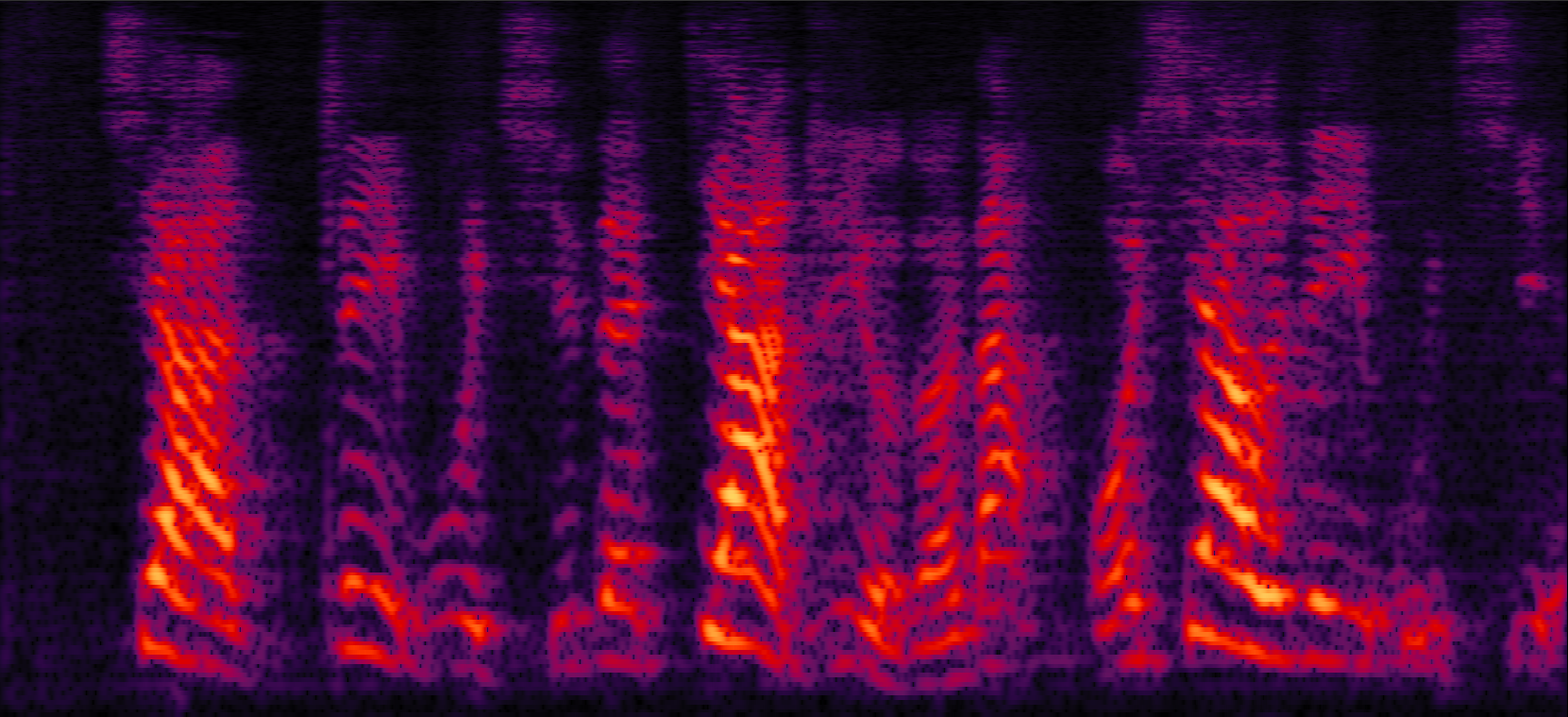

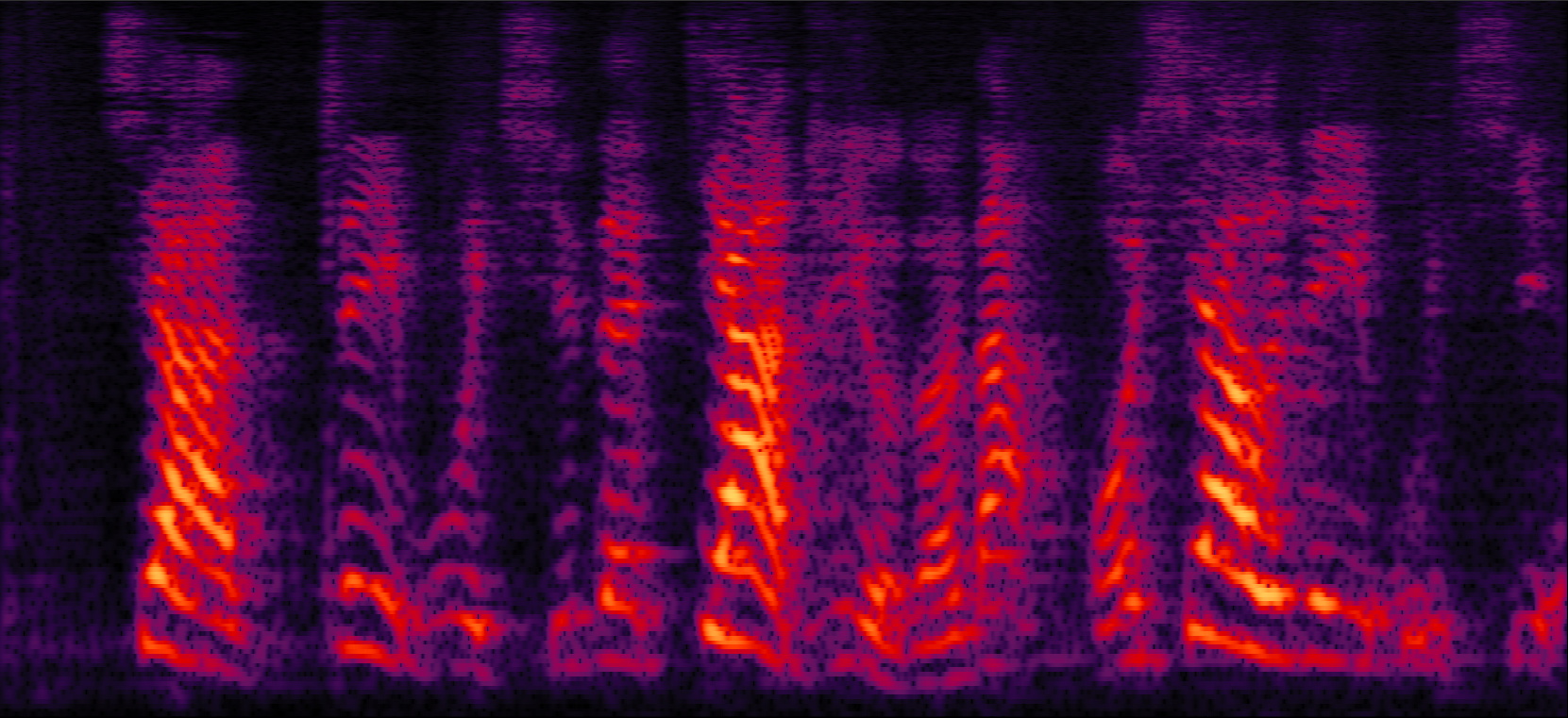

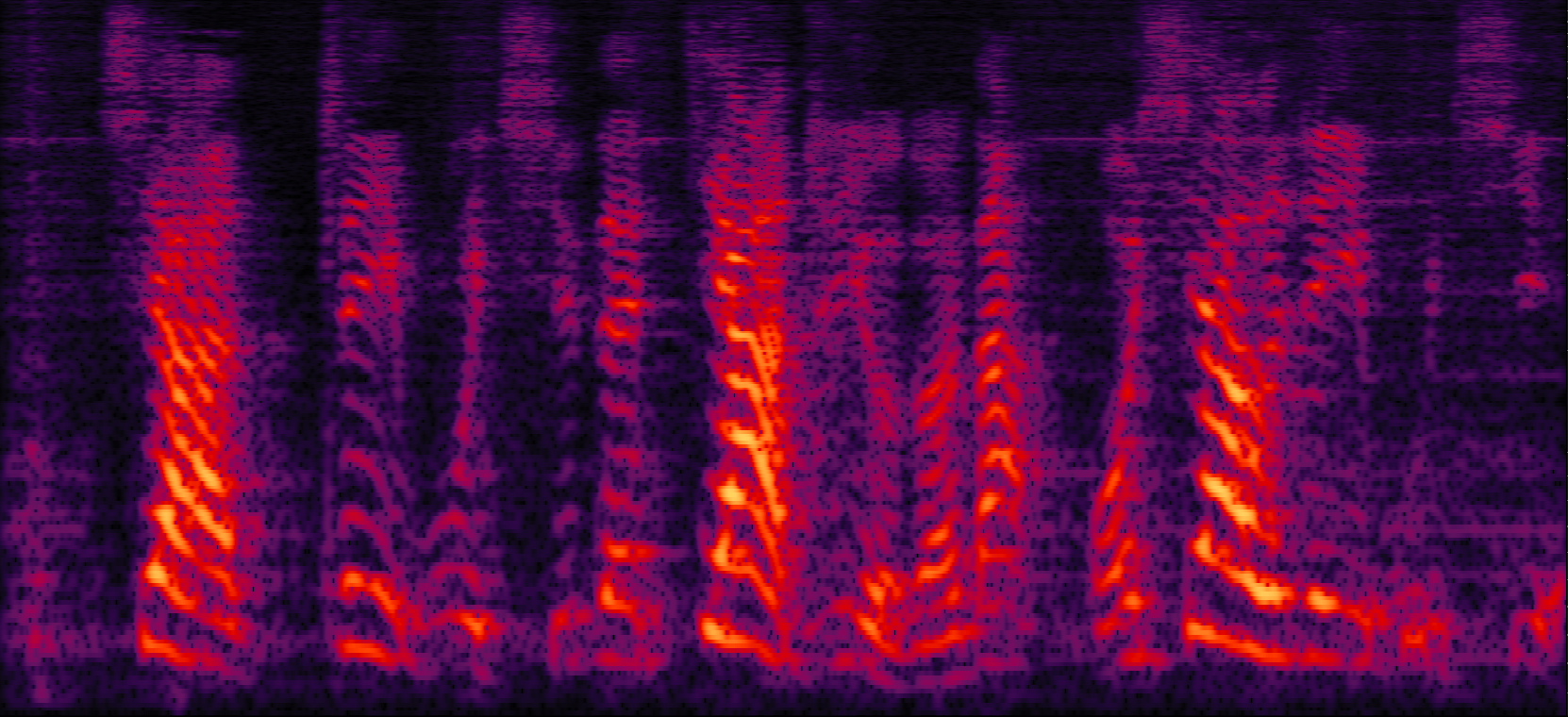

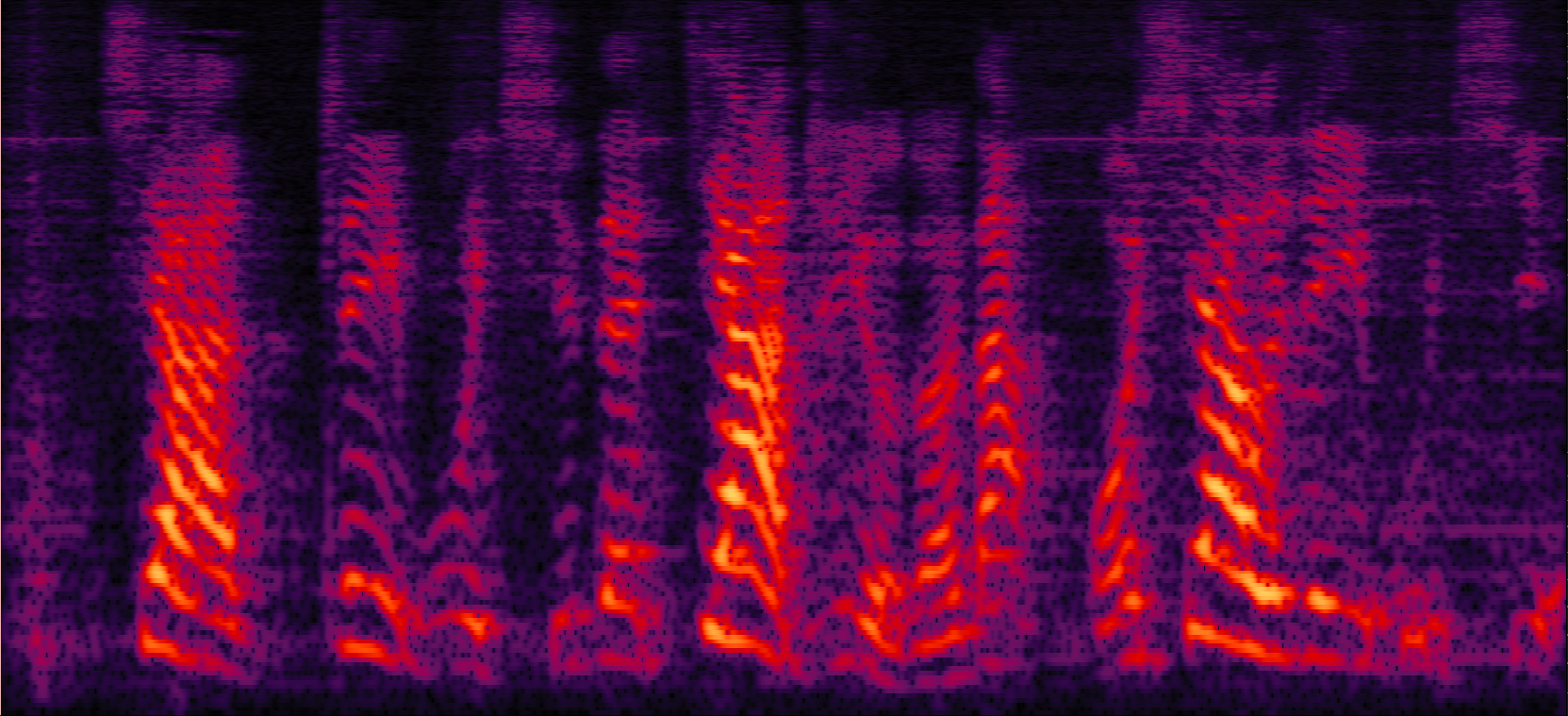

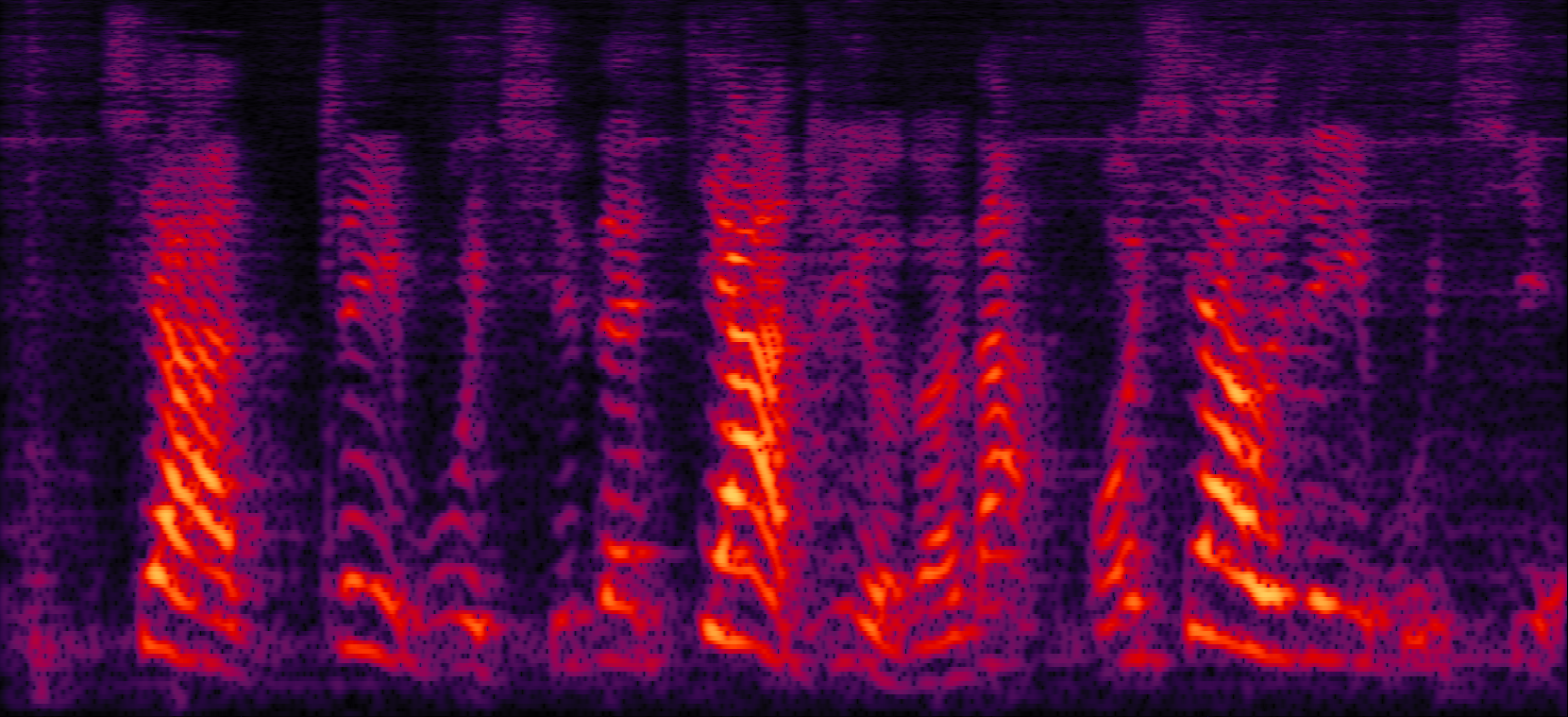

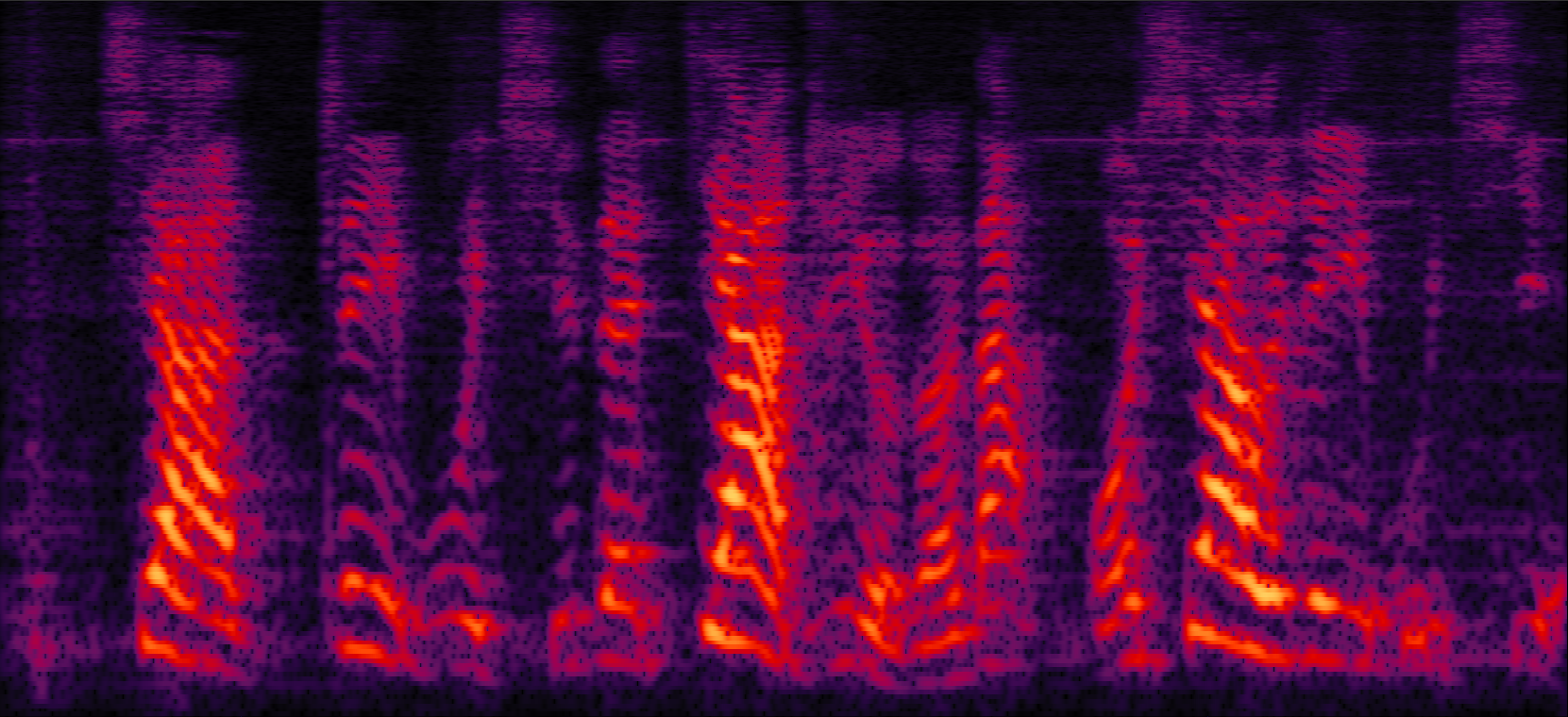

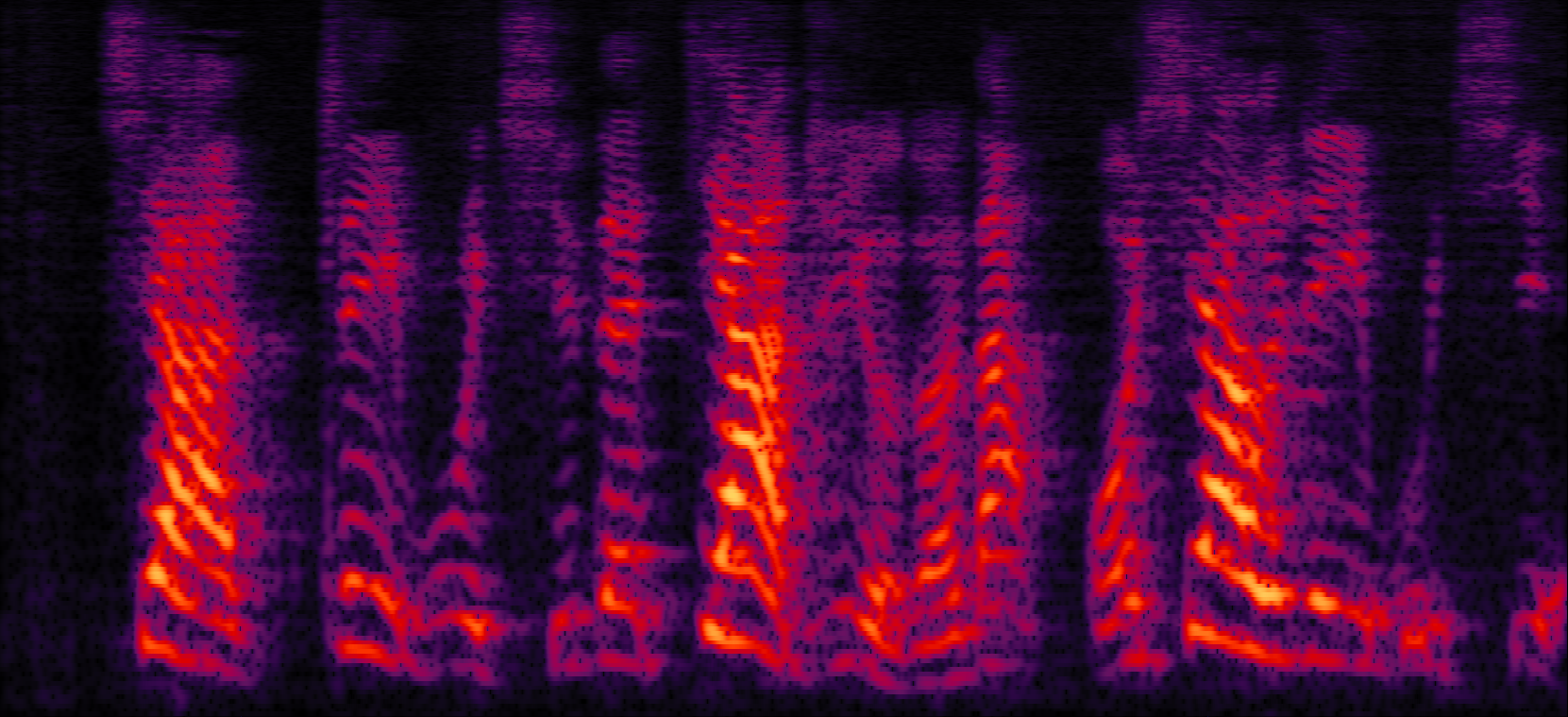

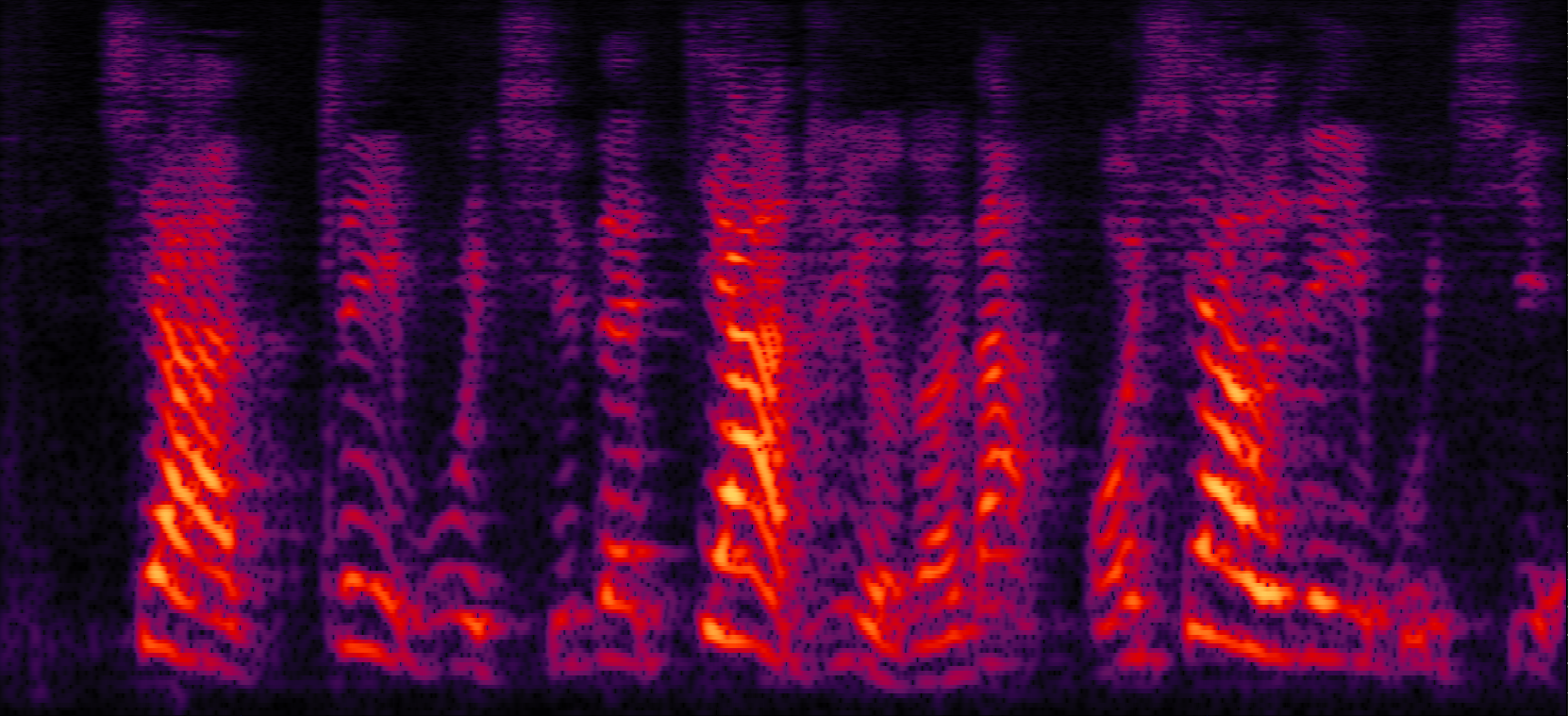

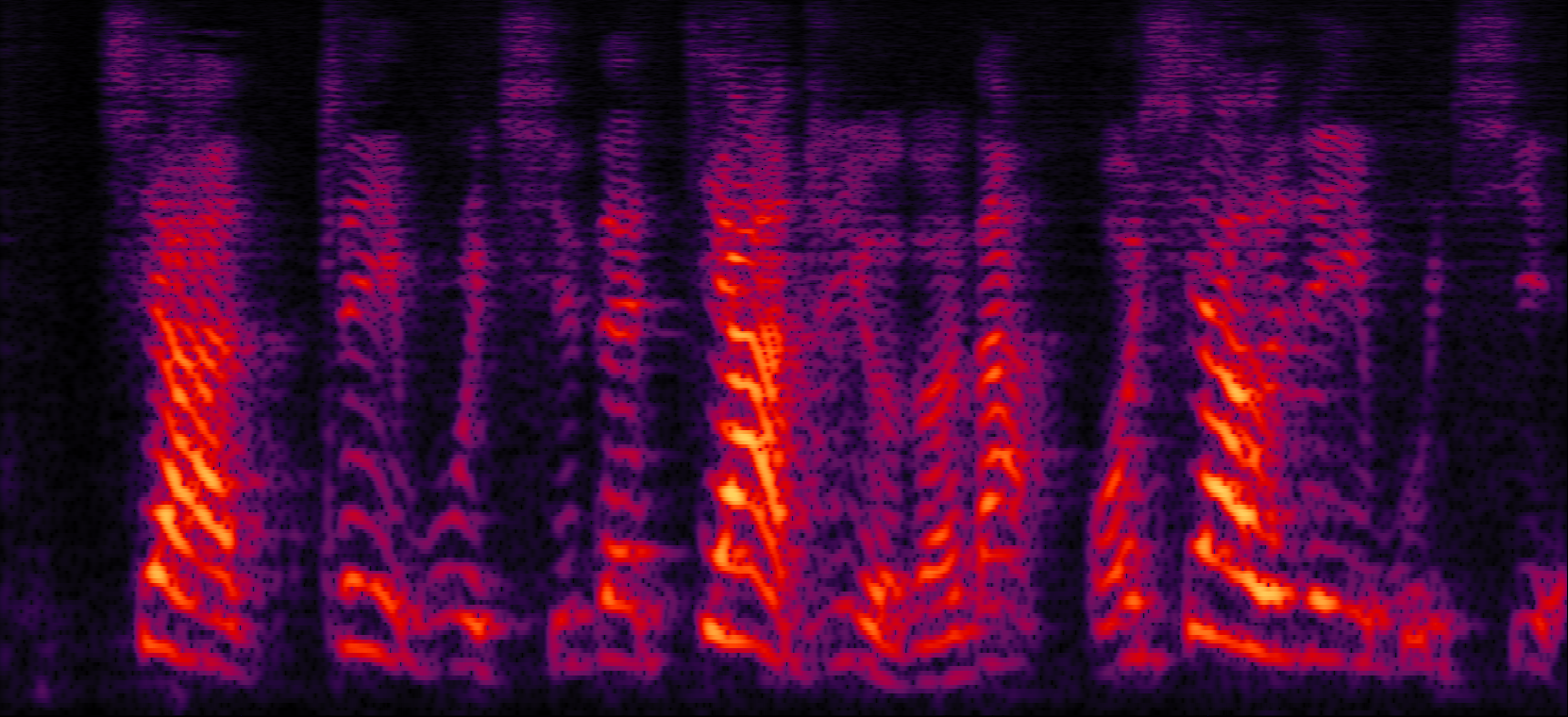

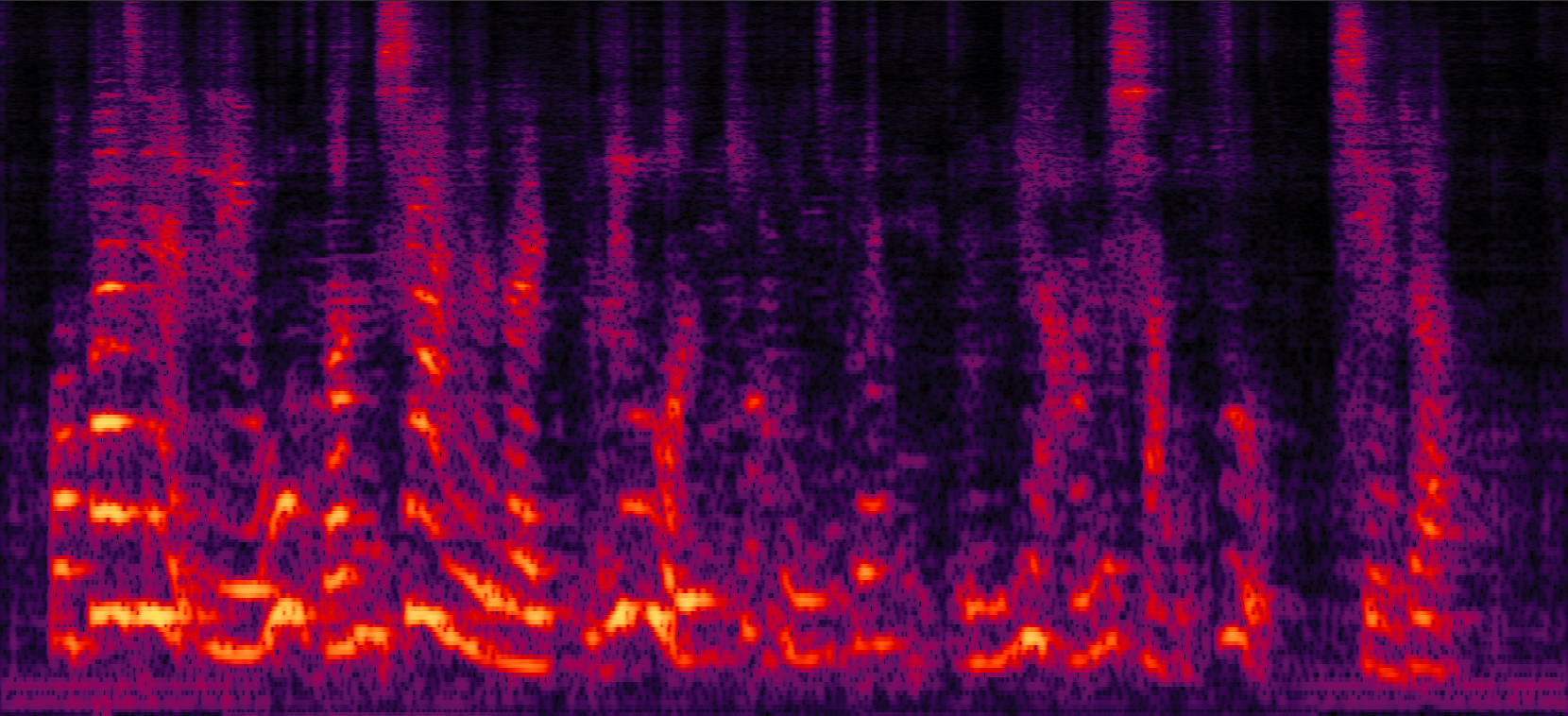

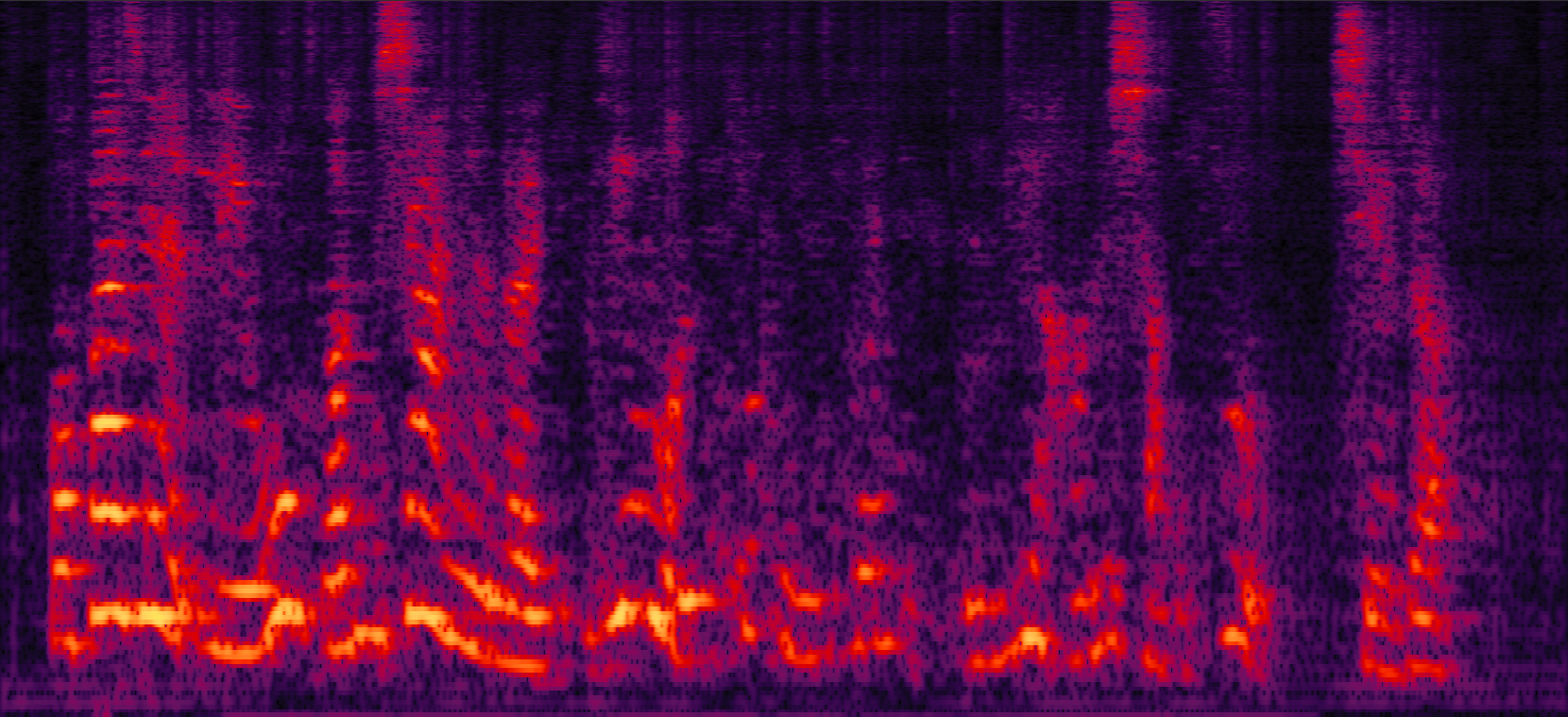

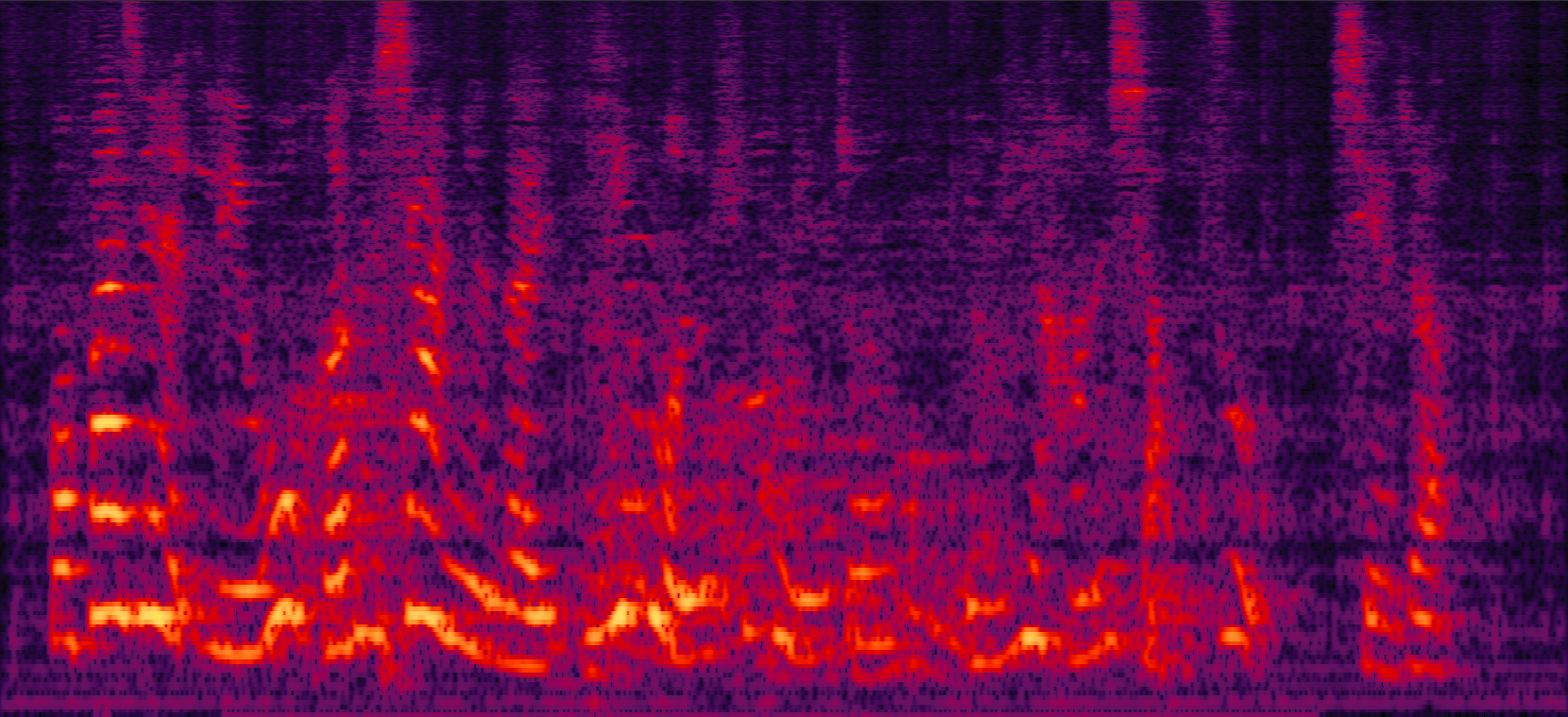

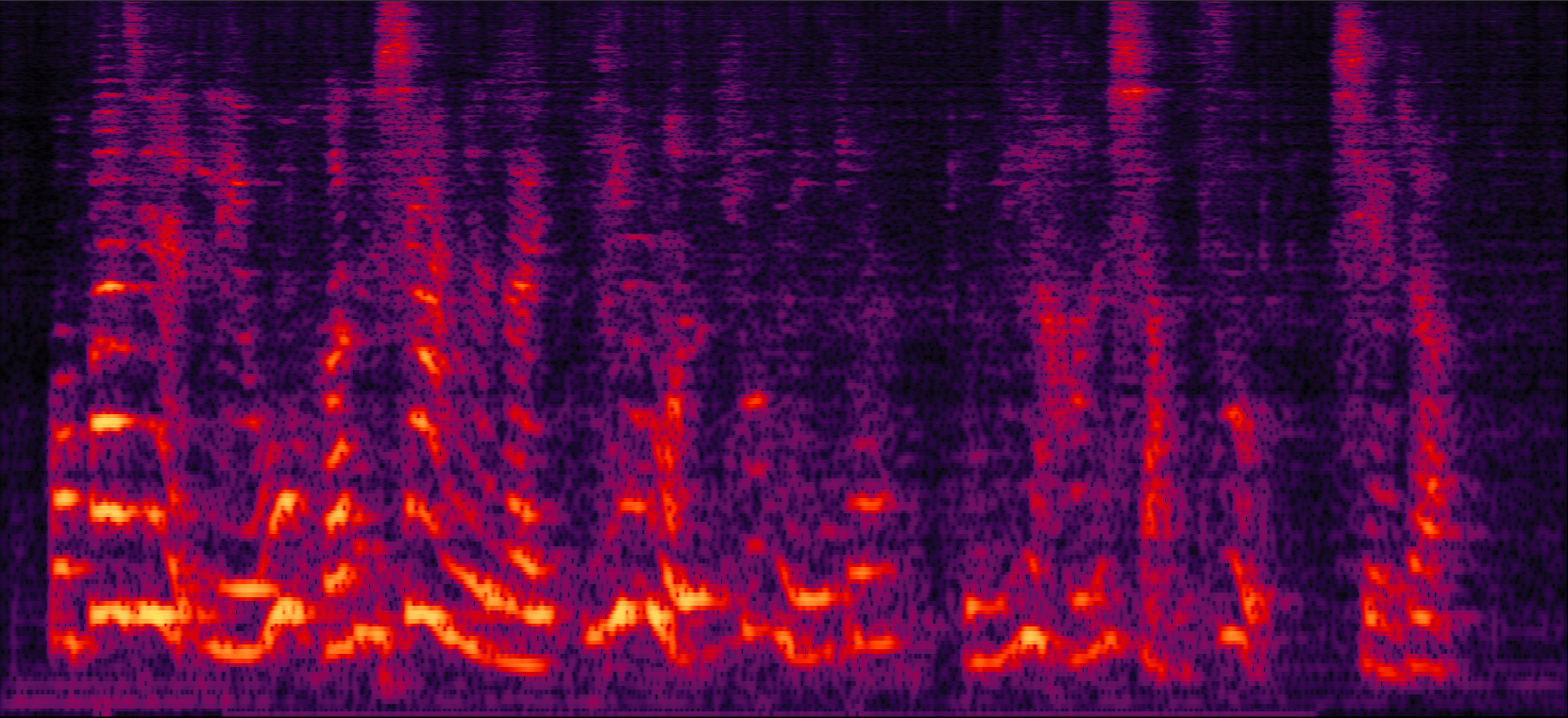

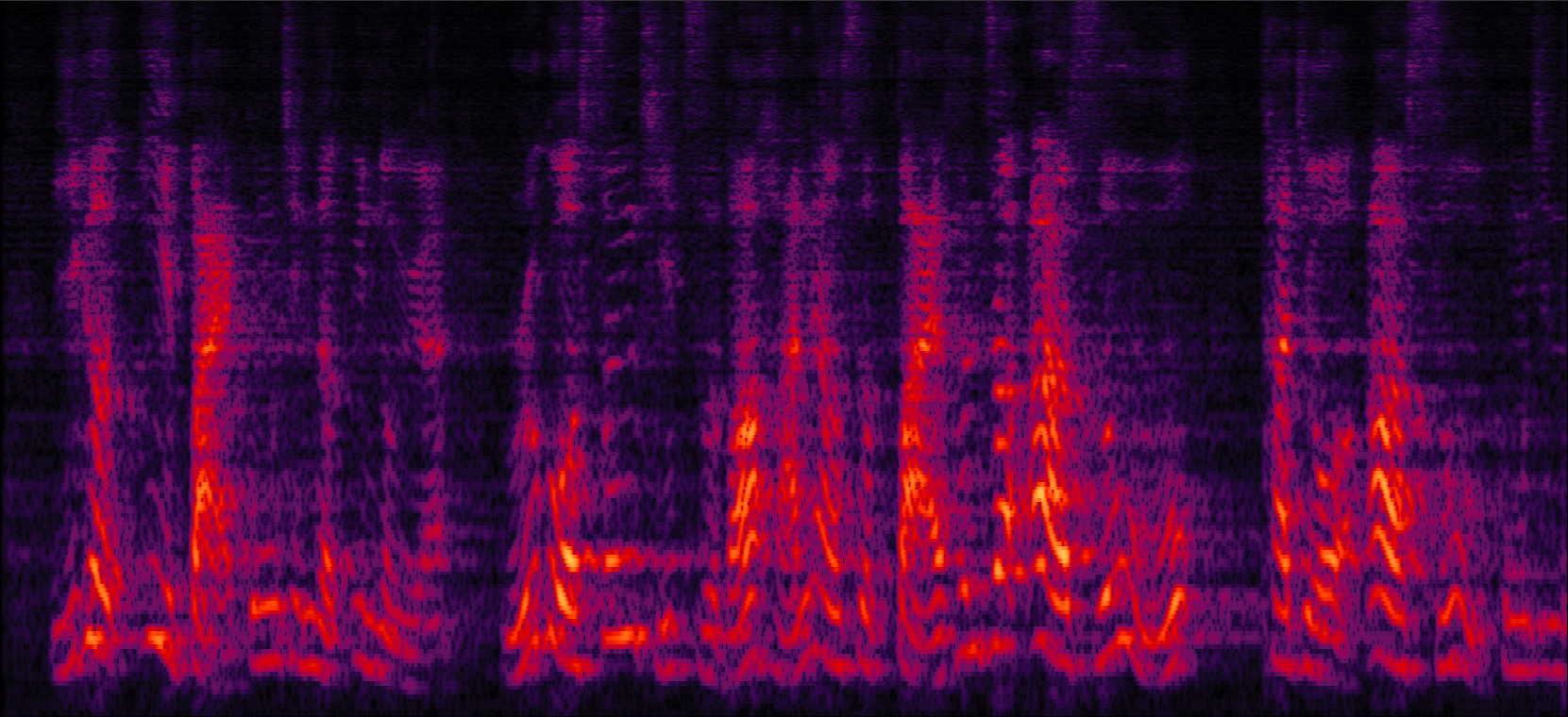

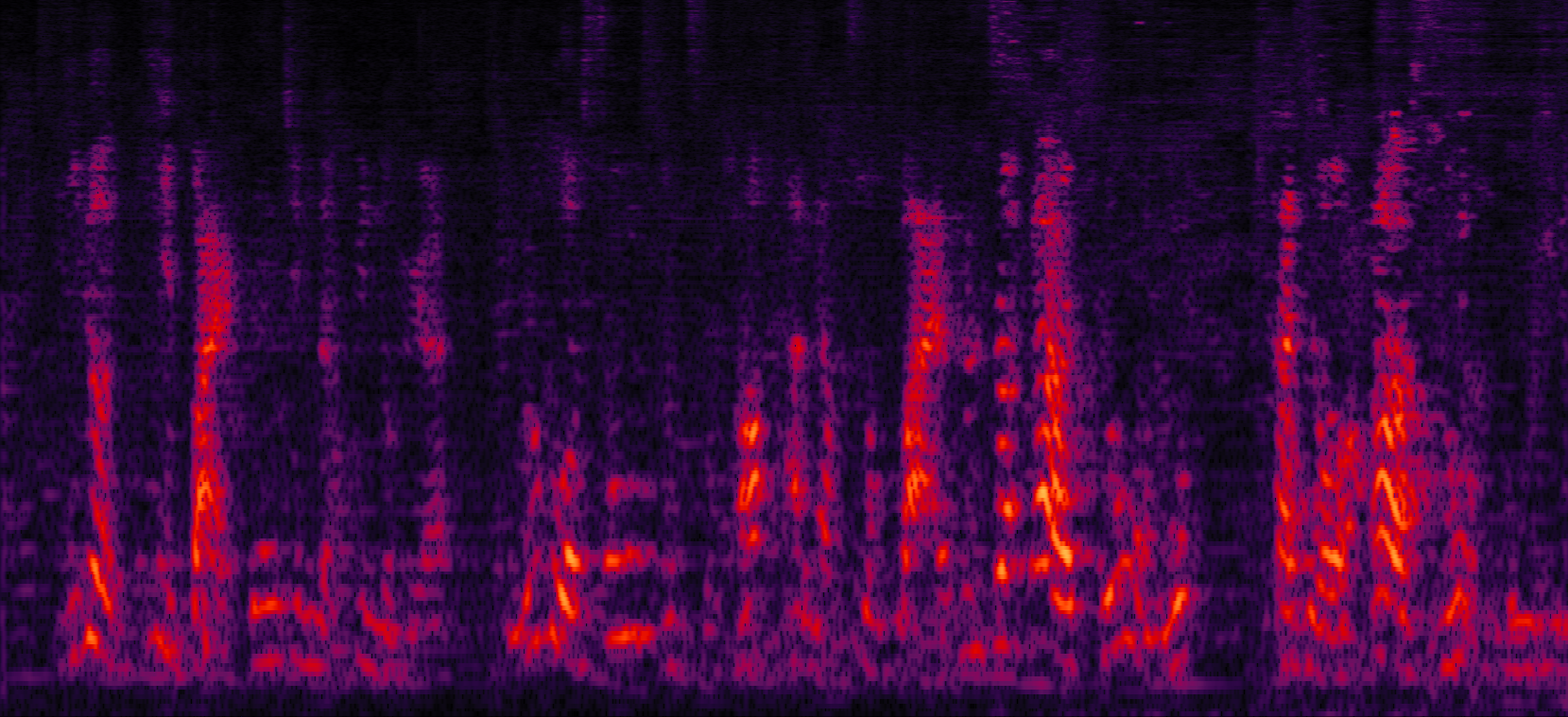

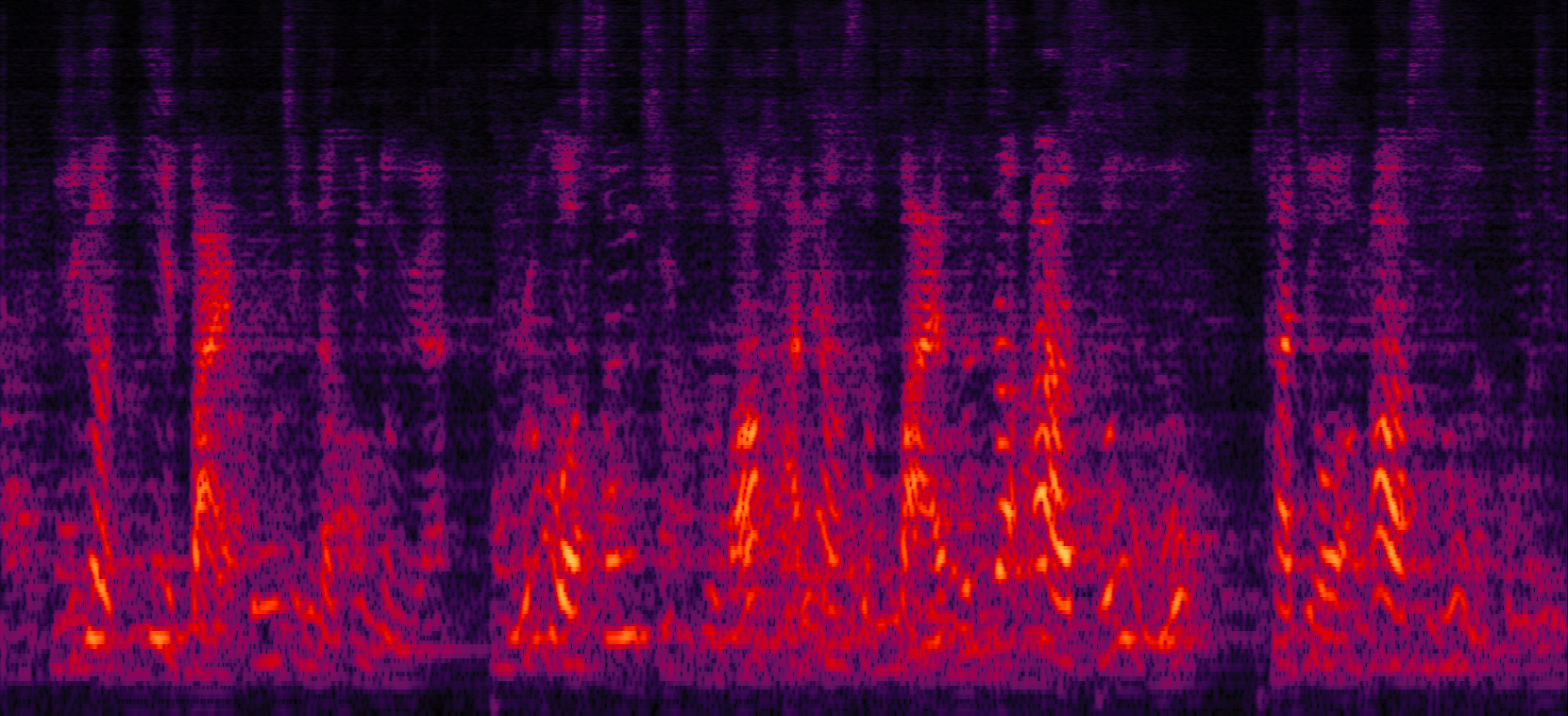

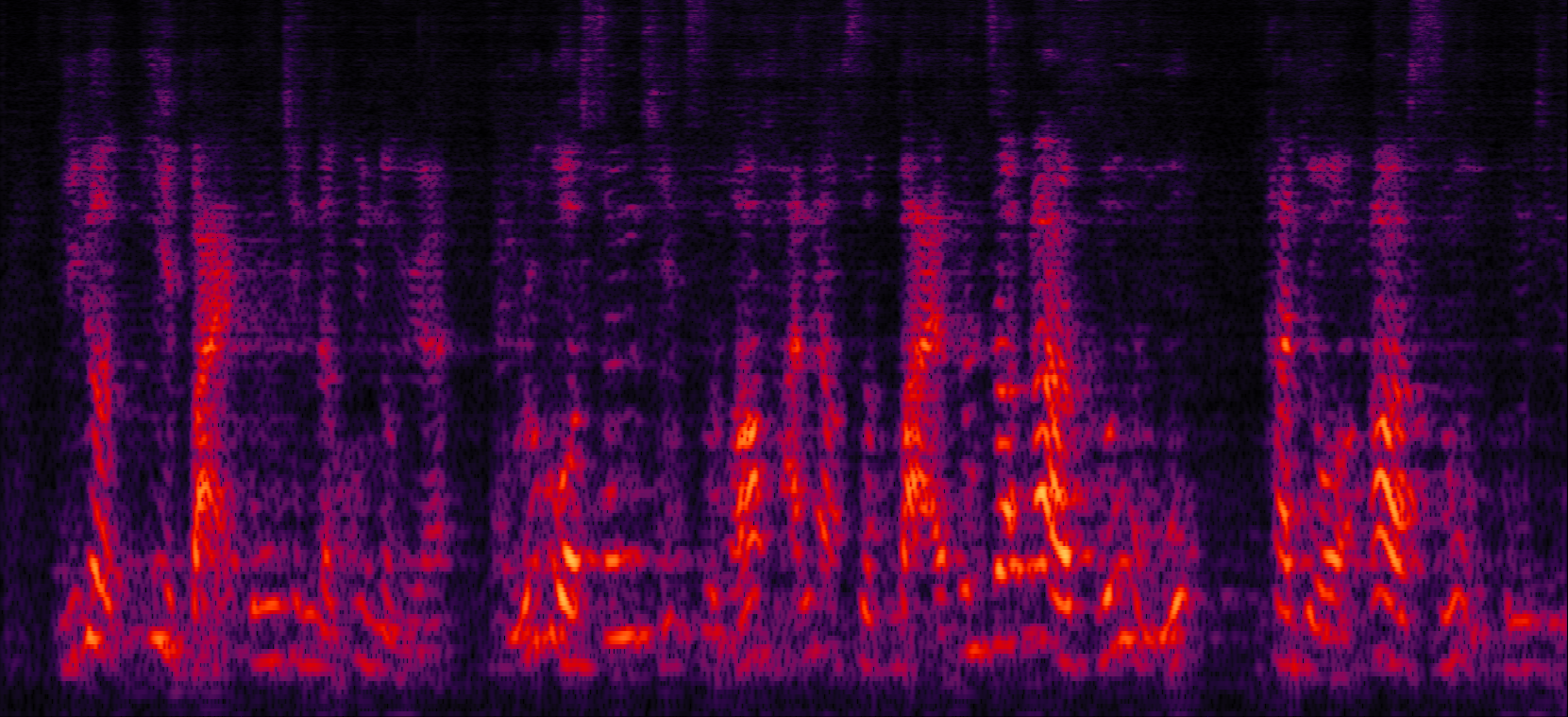

Purely NN systems remove most of the residual noise but introduce nonlinear distortions (e.g., black holes in the spectrogram).

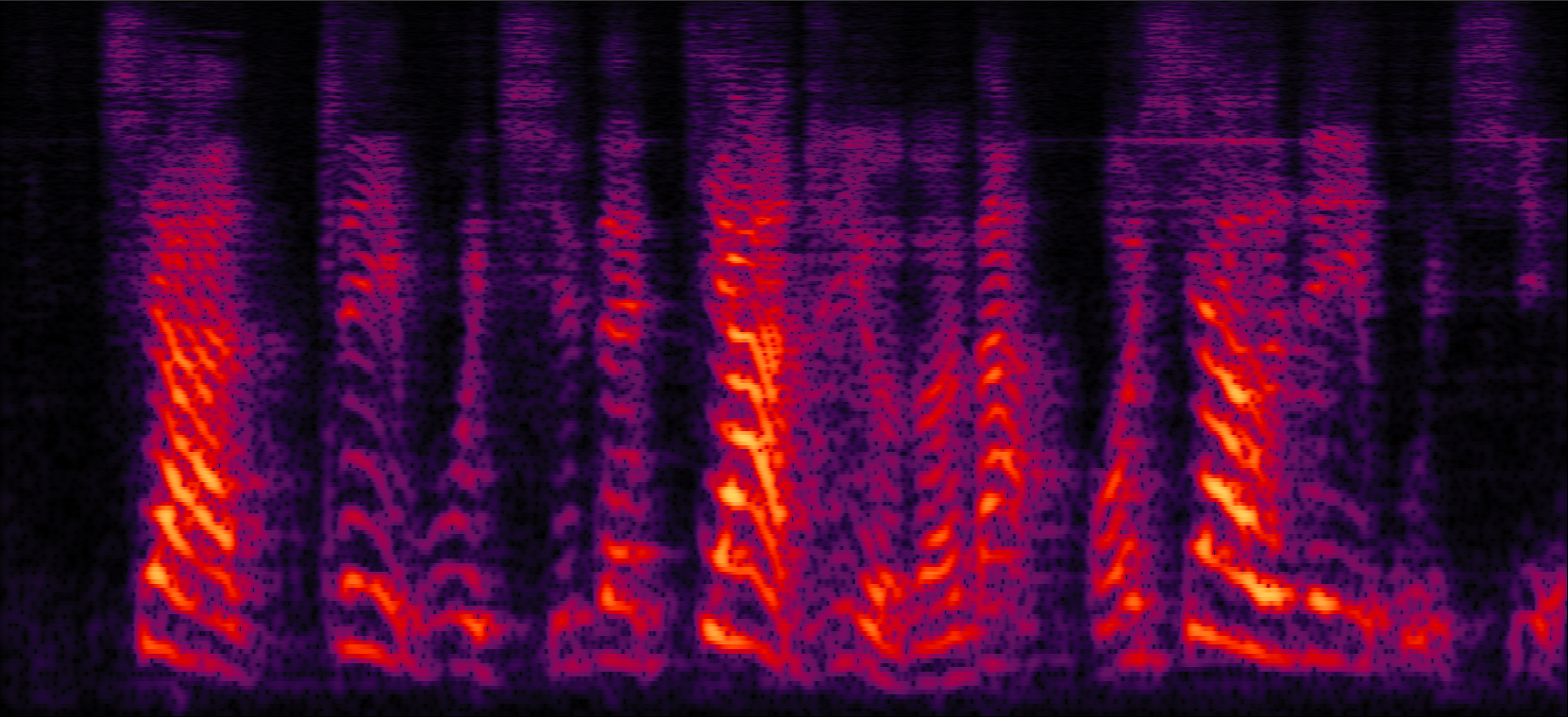

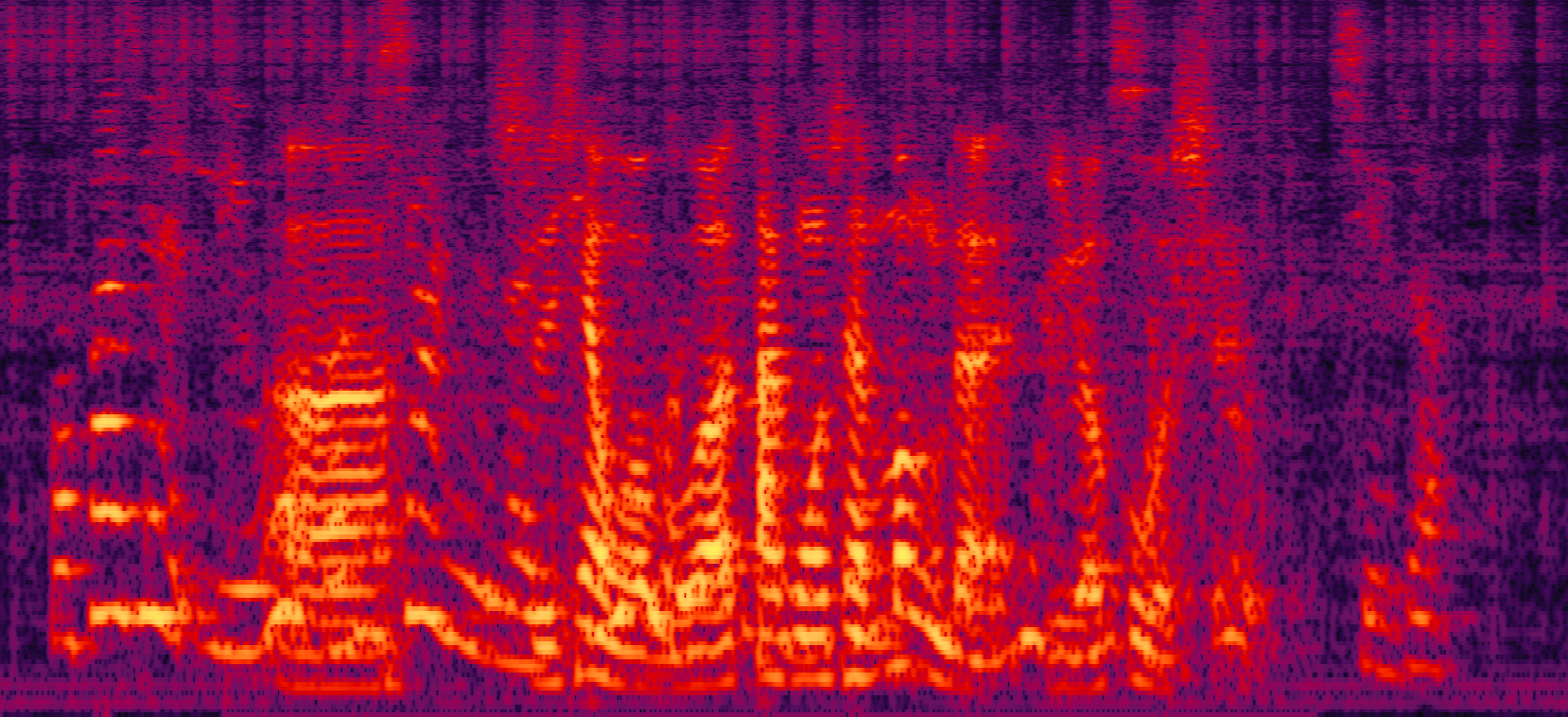

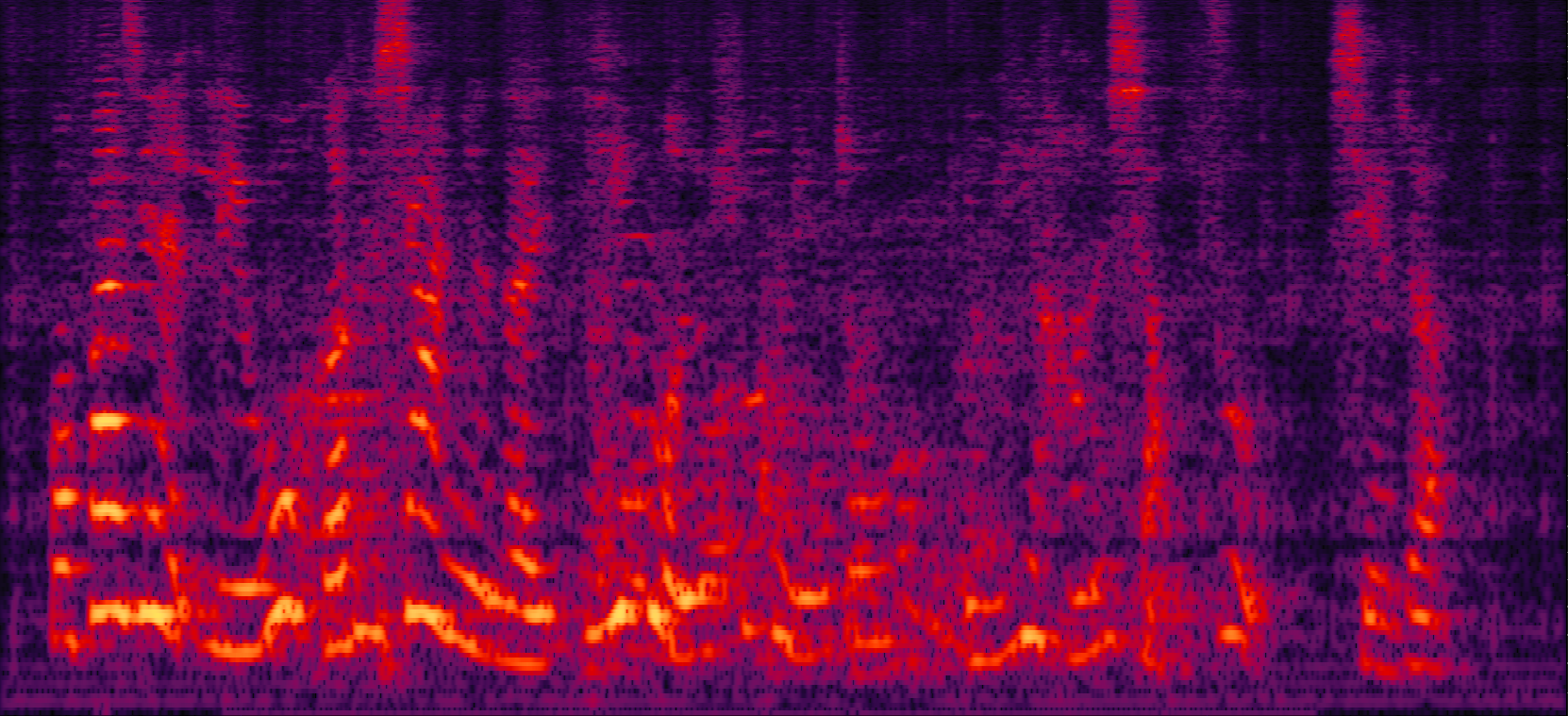

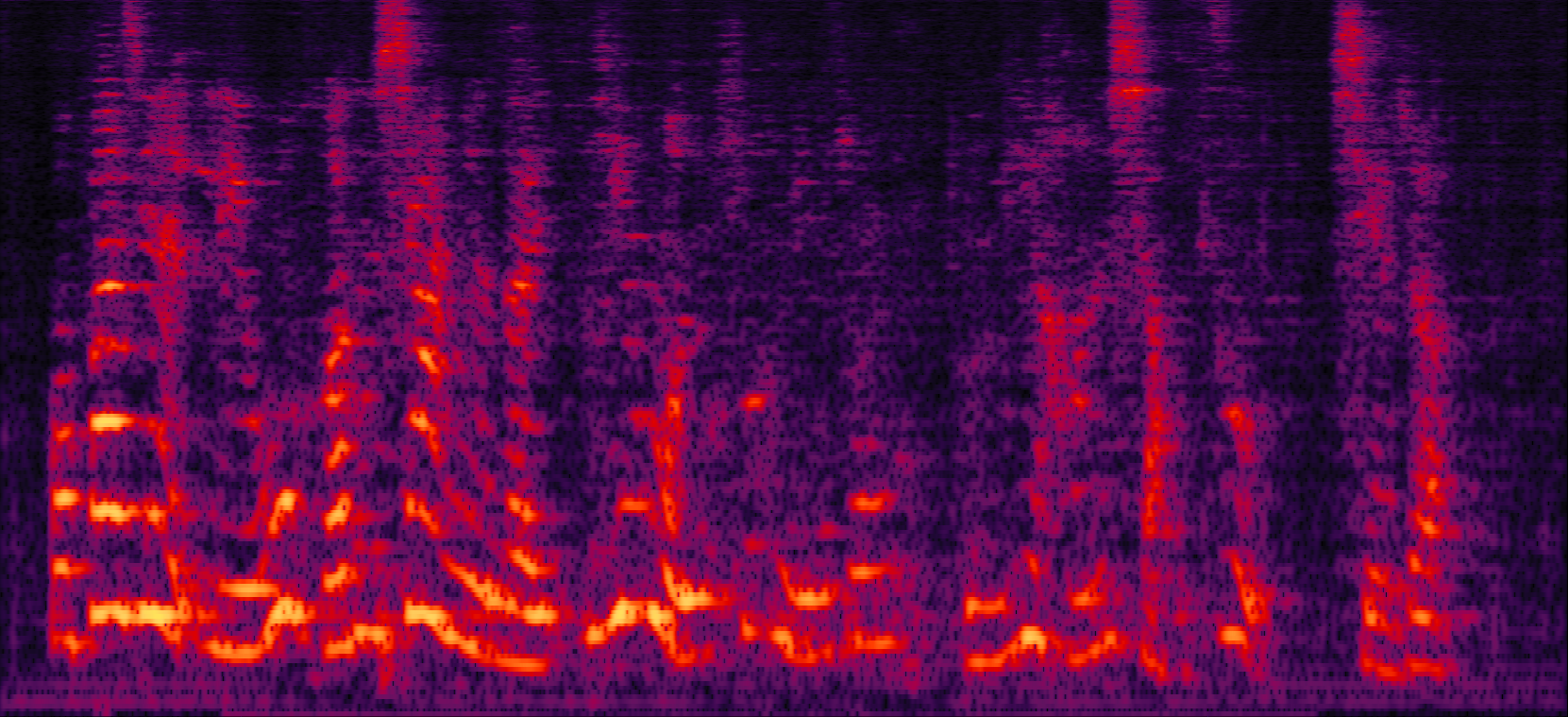

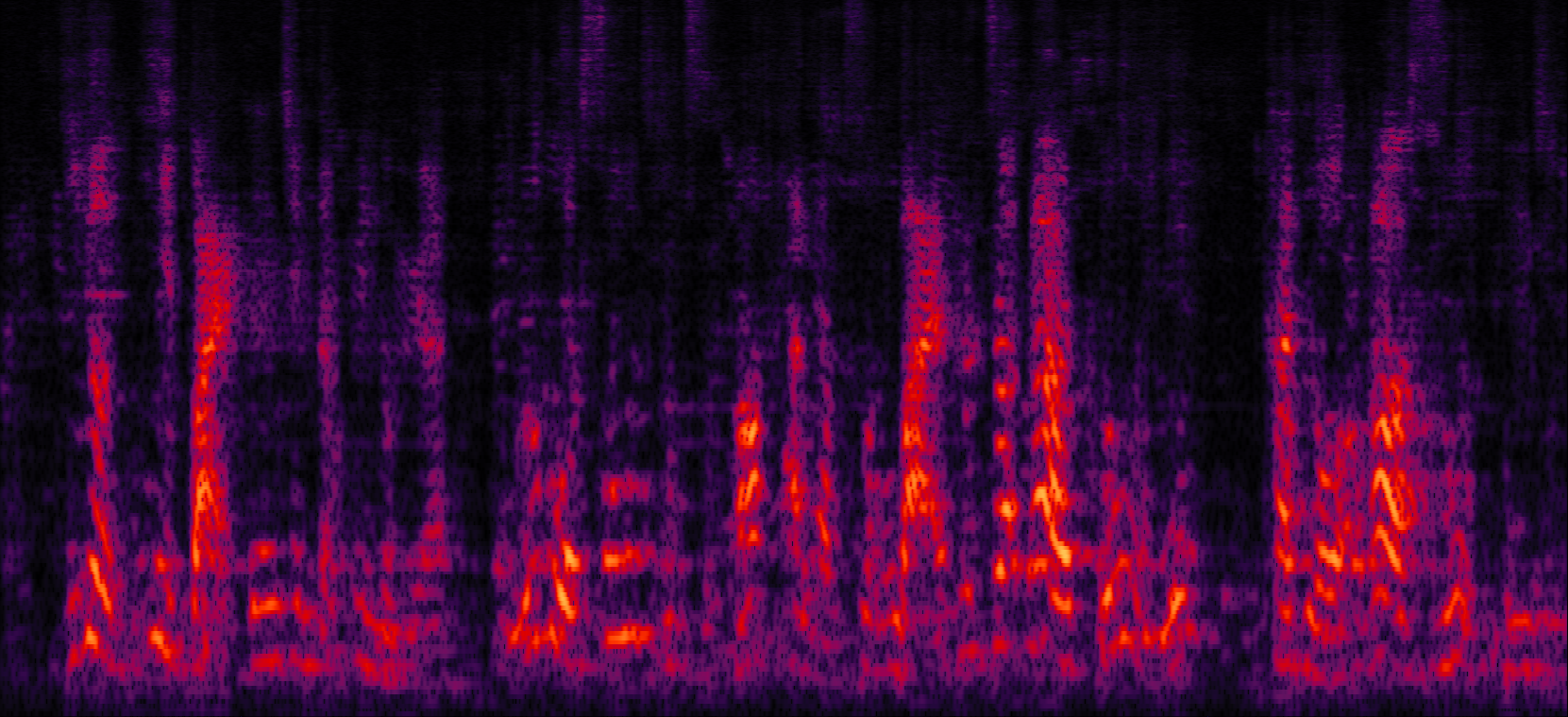

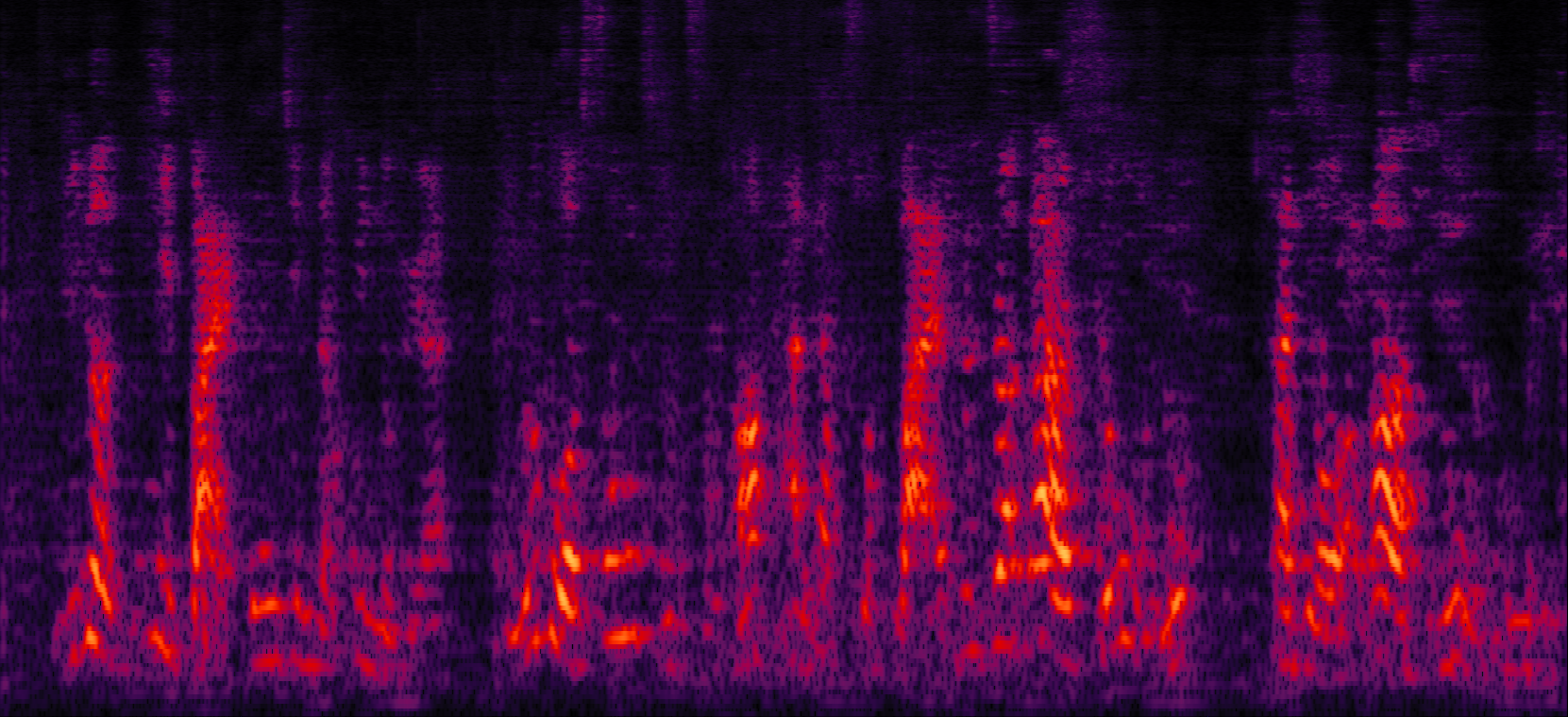

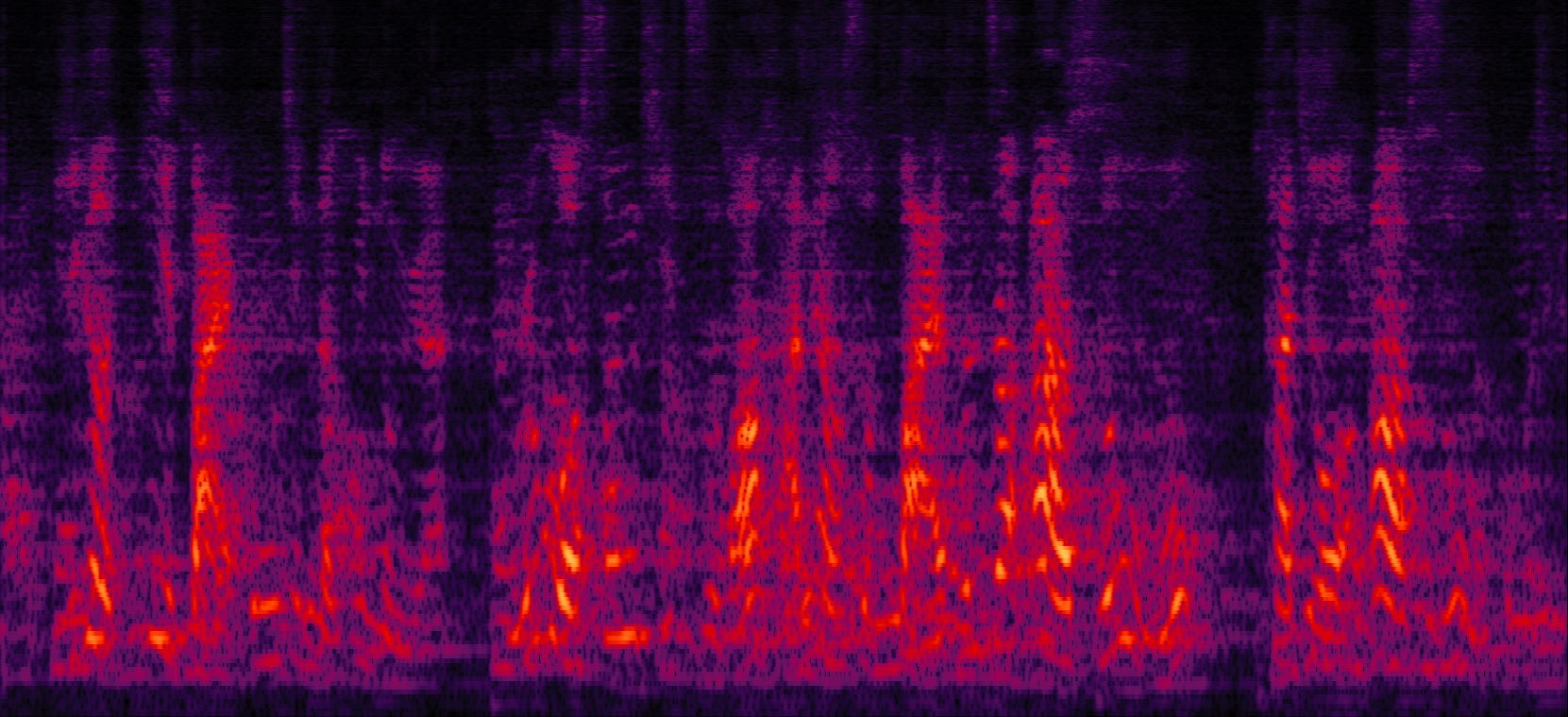

Conventional MVDR systems introduce fewer distortions to the target speech but leave a high level of residual noise.
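The "distortionless" property behind this behaviour can be illustrated with the textbook MVDR solution for a single frequency bin, w = Φ_NN⁻¹ v / (vᴴ Φ_NN⁻¹ v), where Φ_NN is the noise covariance matrix and v the steering vector. The sketch below is a generic illustration, not the ADL-MVDR proposed here: the function name `mvdr_weights` and the random toy covariance and steering vector are assumptions made for the example, and in a mask-based system these quantities would be estimated from the data.

```python
import numpy as np

def mvdr_weights(noise_cov, steering):
    """Textbook MVDR weights for one frequency bin (illustrative sketch)."""
    numerator = np.linalg.solve(noise_cov, steering)  # Phi_NN^{-1} v
    return numerator / (steering.conj() @ numerator)  # divide by v^H Phi_NN^{-1} v

# Toy example with 3 microphones (all values are made up).
rng = np.random.default_rng(0)
A = rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3))
noise_cov = A @ A.conj().T + 1e-3 * np.eye(3)   # Hermitian positive definite
steering = np.exp(1j * rng.uniform(0, 2 * np.pi, size=3))

w = mvdr_weights(noise_cov, steering)
# Distortionless constraint: the response towards the target, w^H v, equals 1.
response = w.conj() @ steering
# Residual noise power at the beamformer output, w^H Phi_NN w.
noise_power = (w.conj() @ noise_cov @ w).real
```

The constraint keeps the target component undistorted, while the noise is only reduced as far as a linear filter with that constraint allows, which is consistent with the residual noise audible in the conventional MVDR demos.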

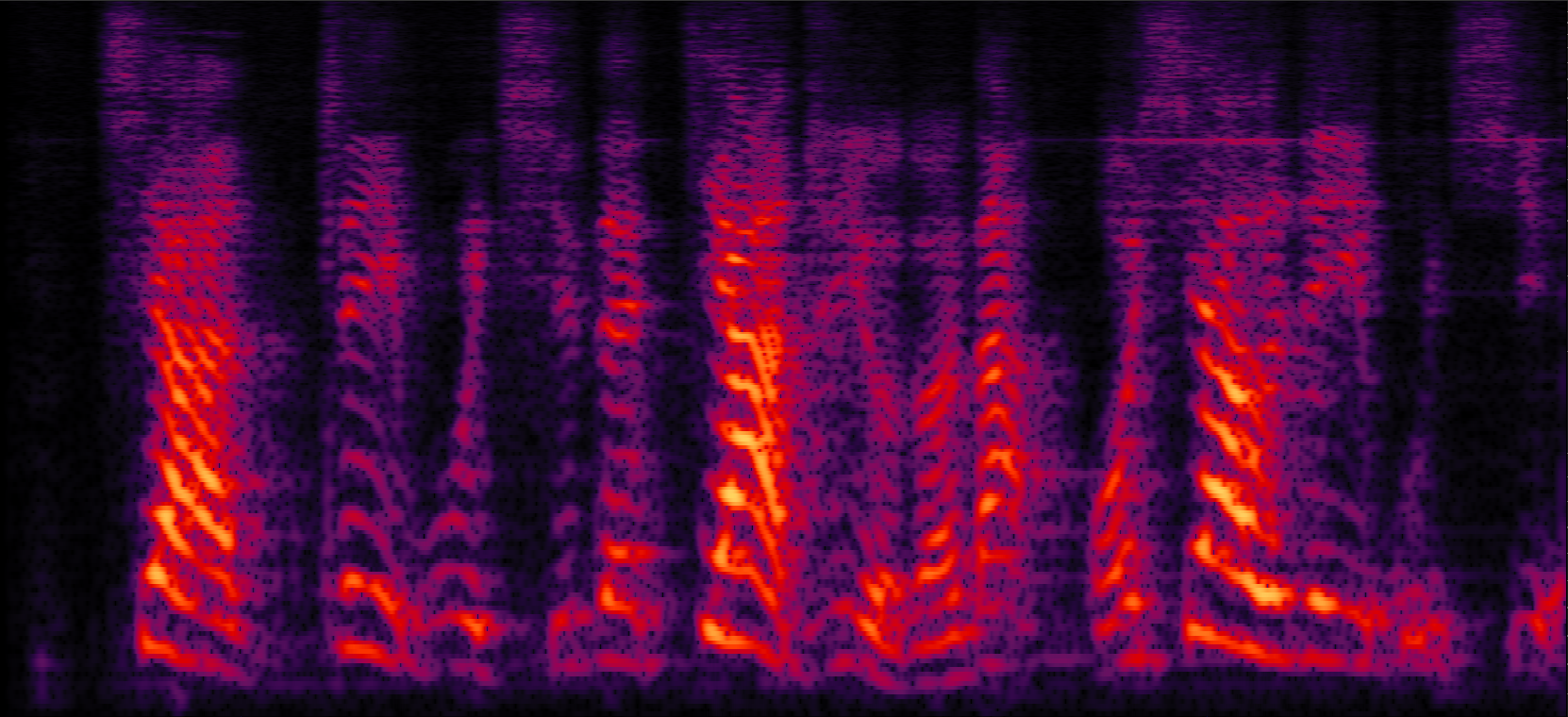

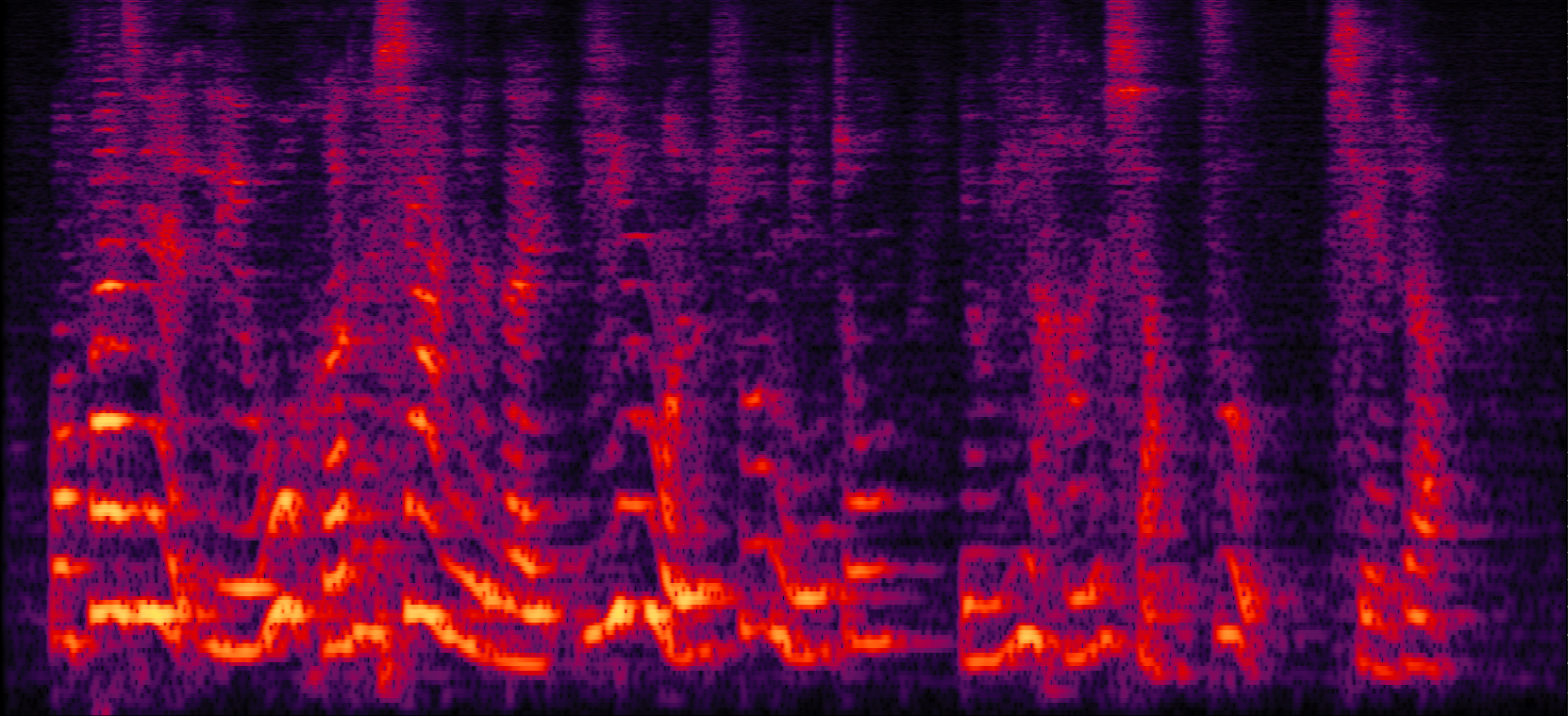

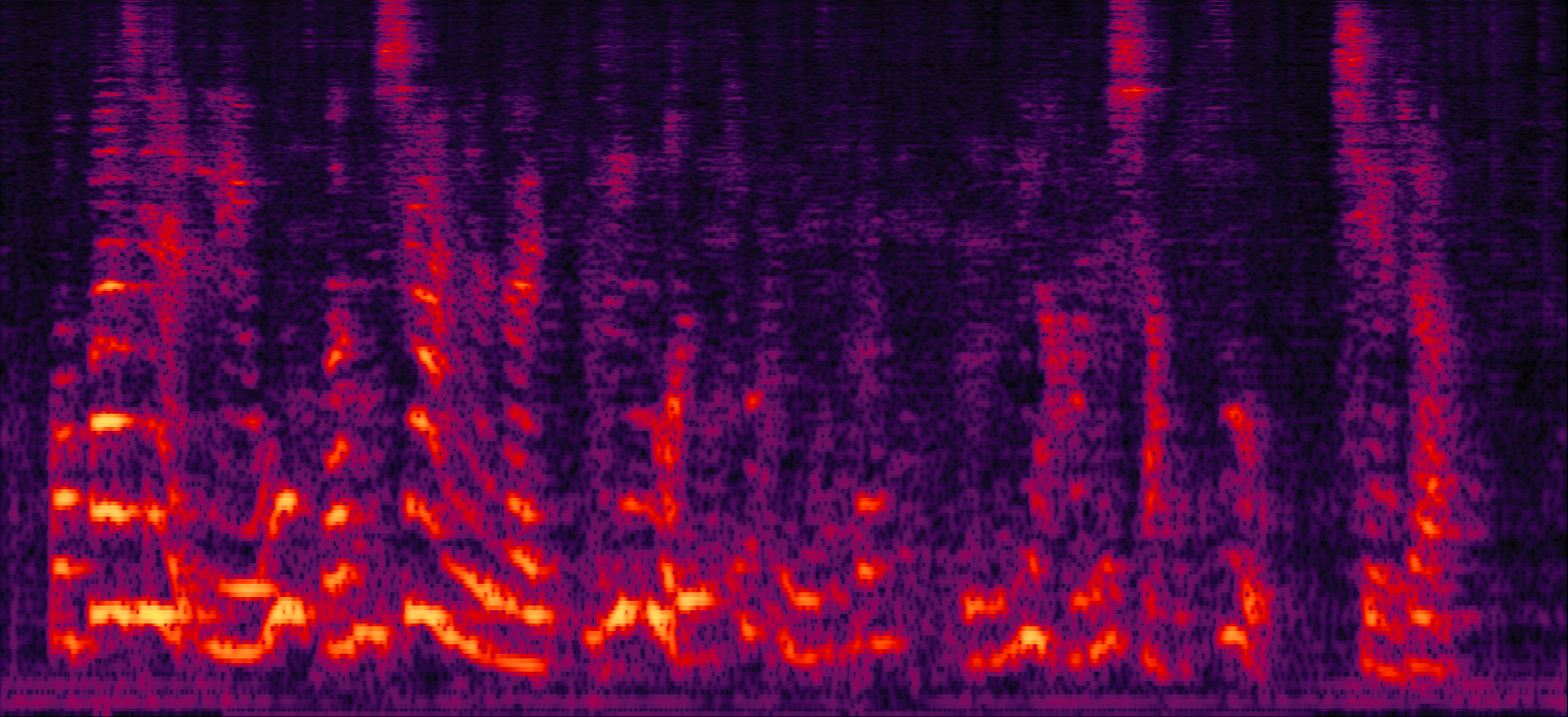

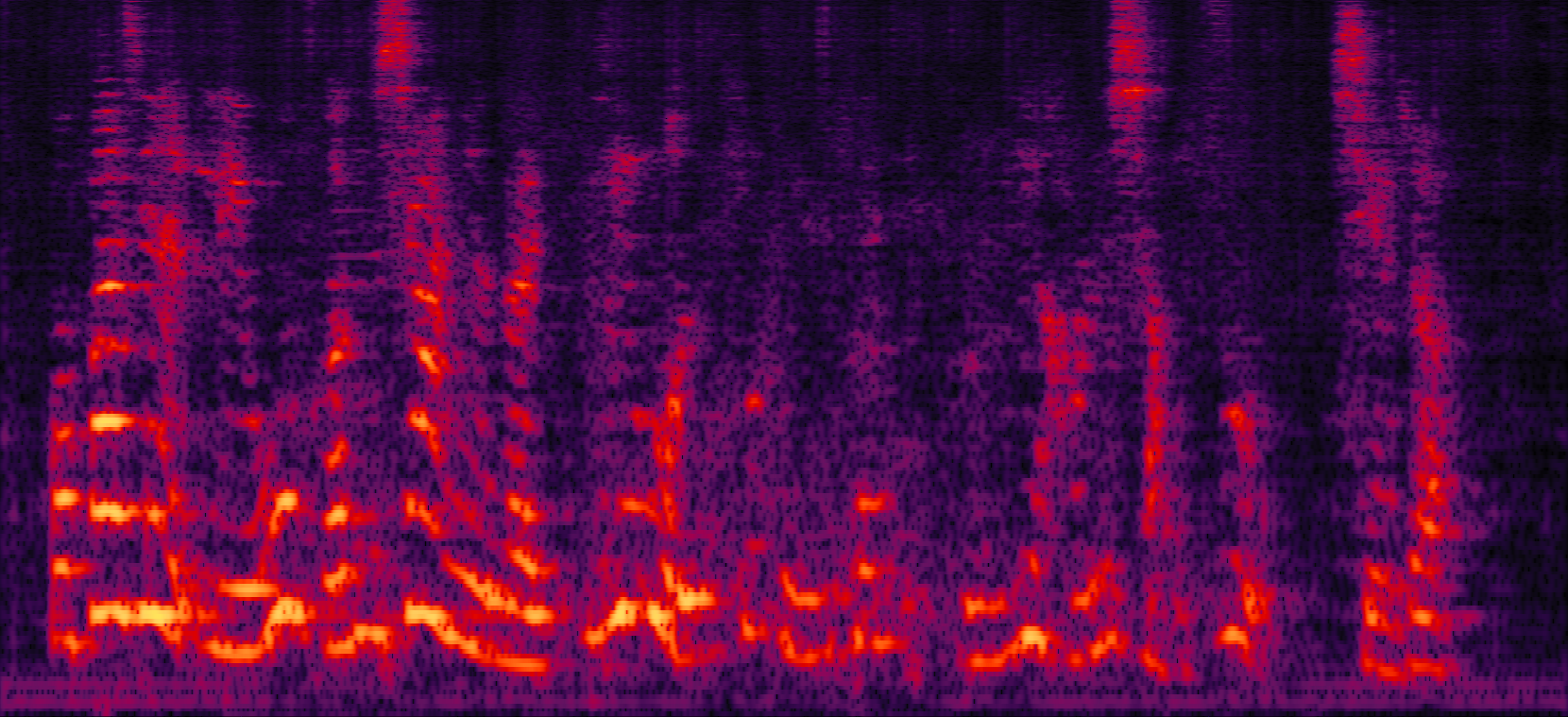

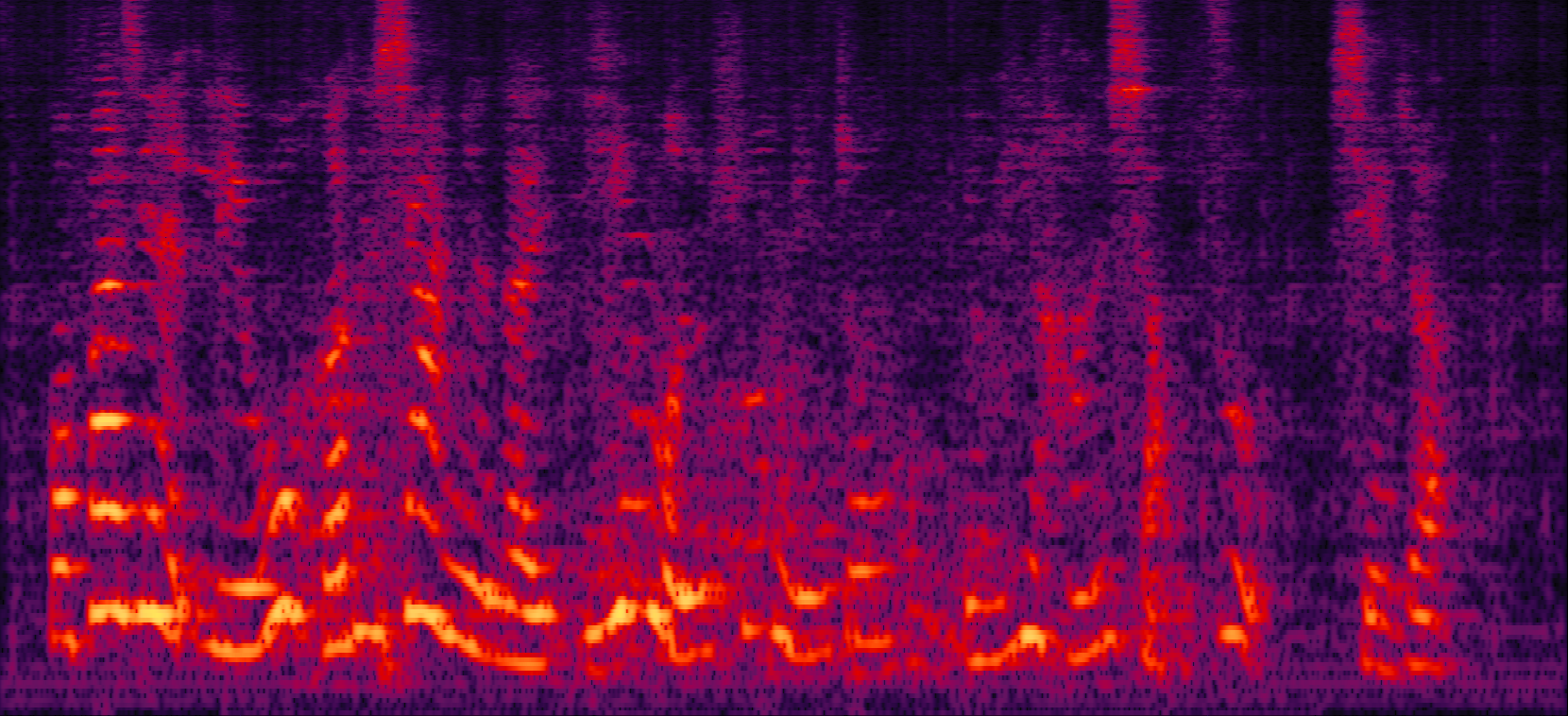

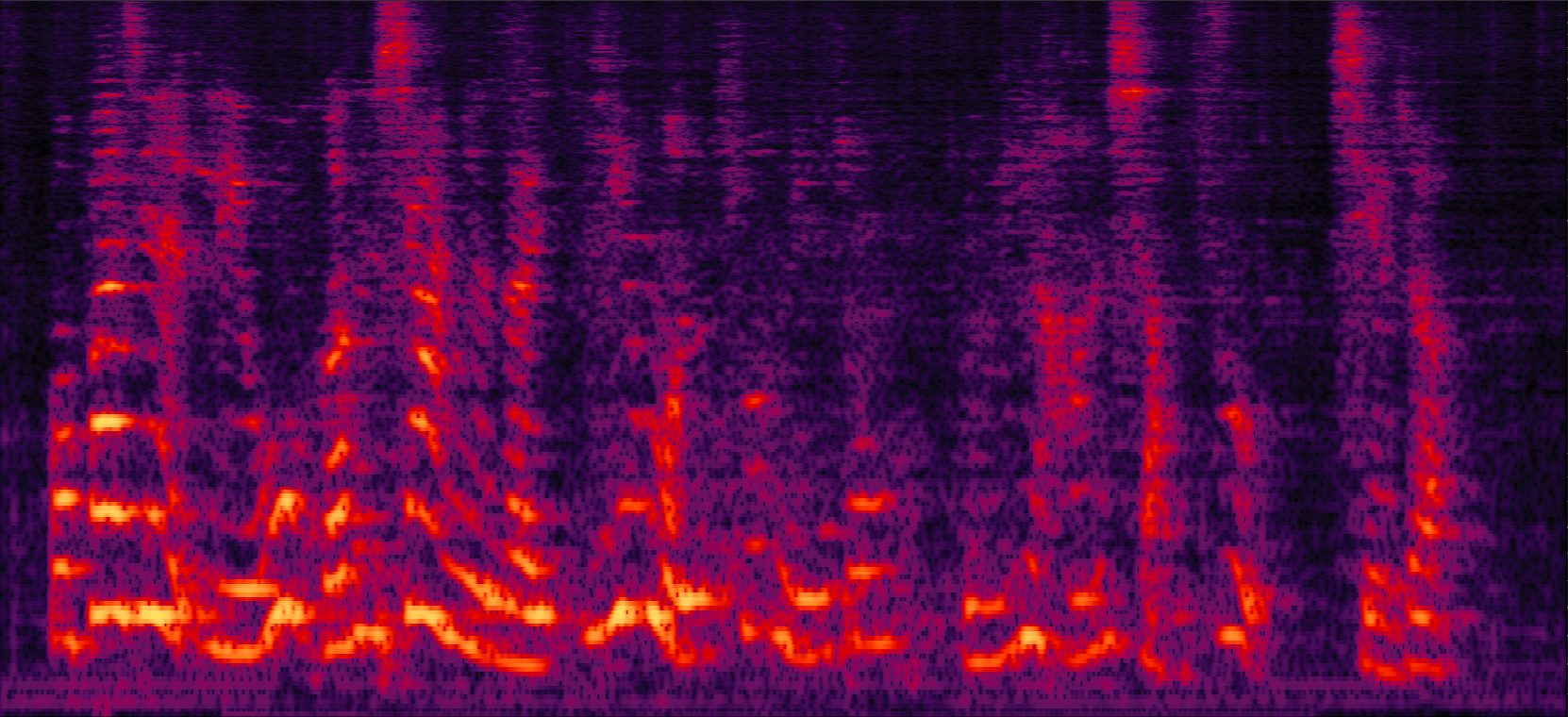

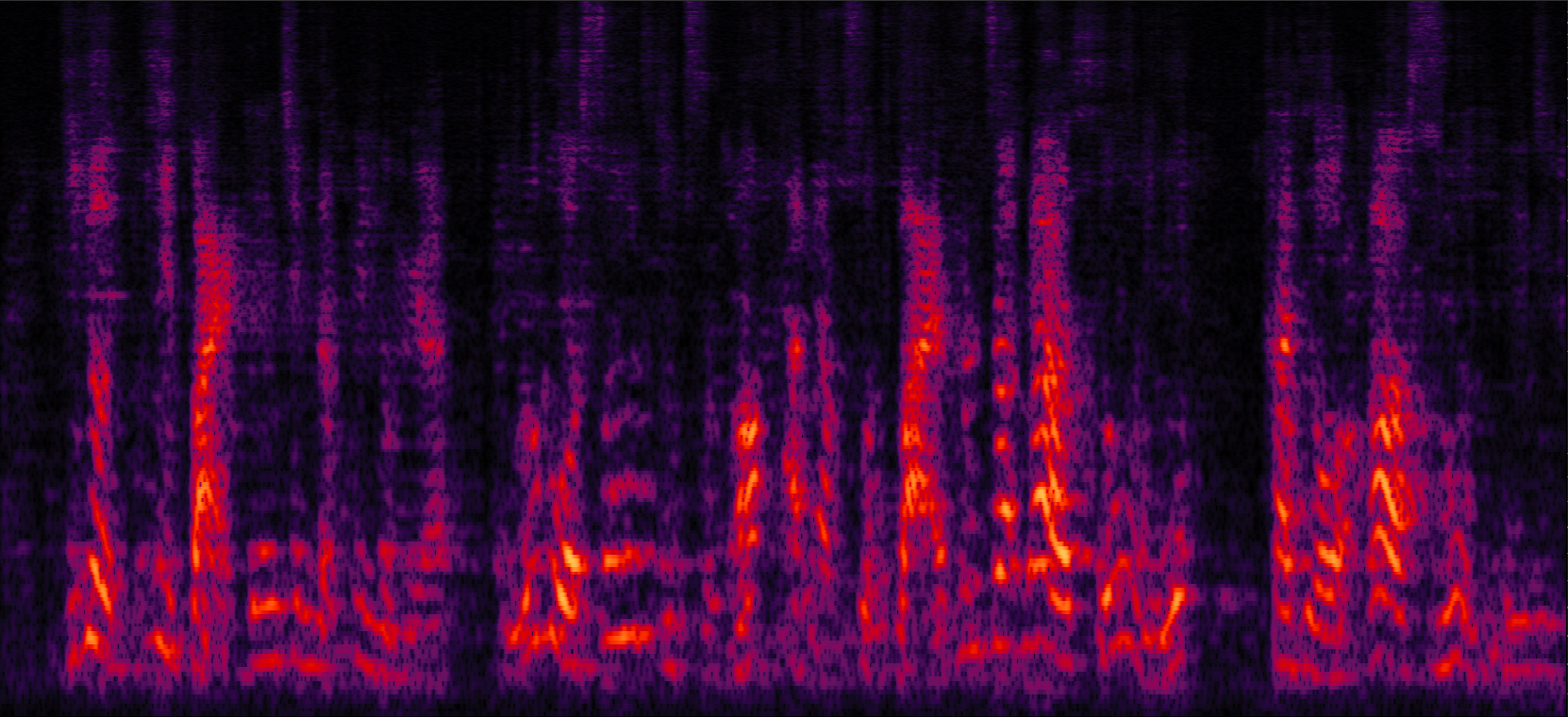

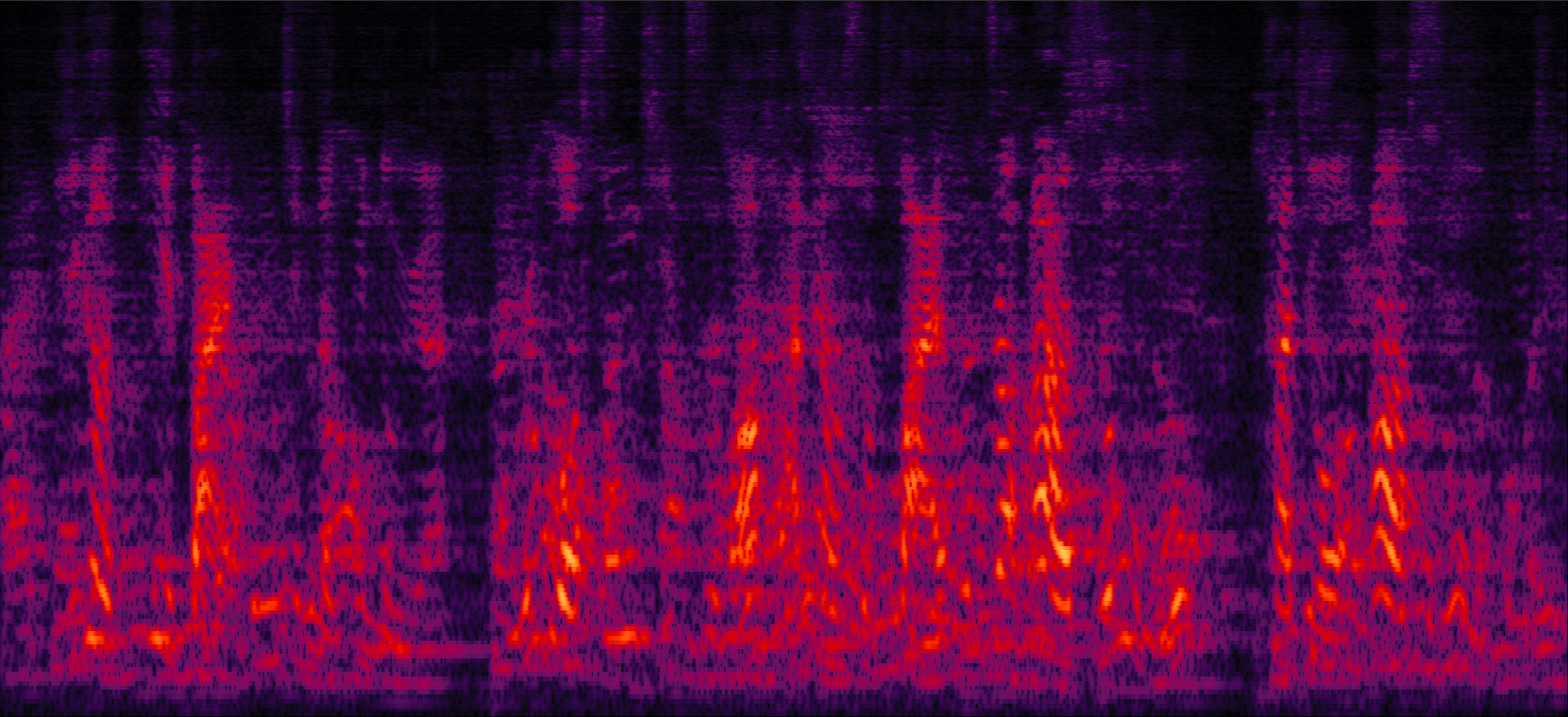

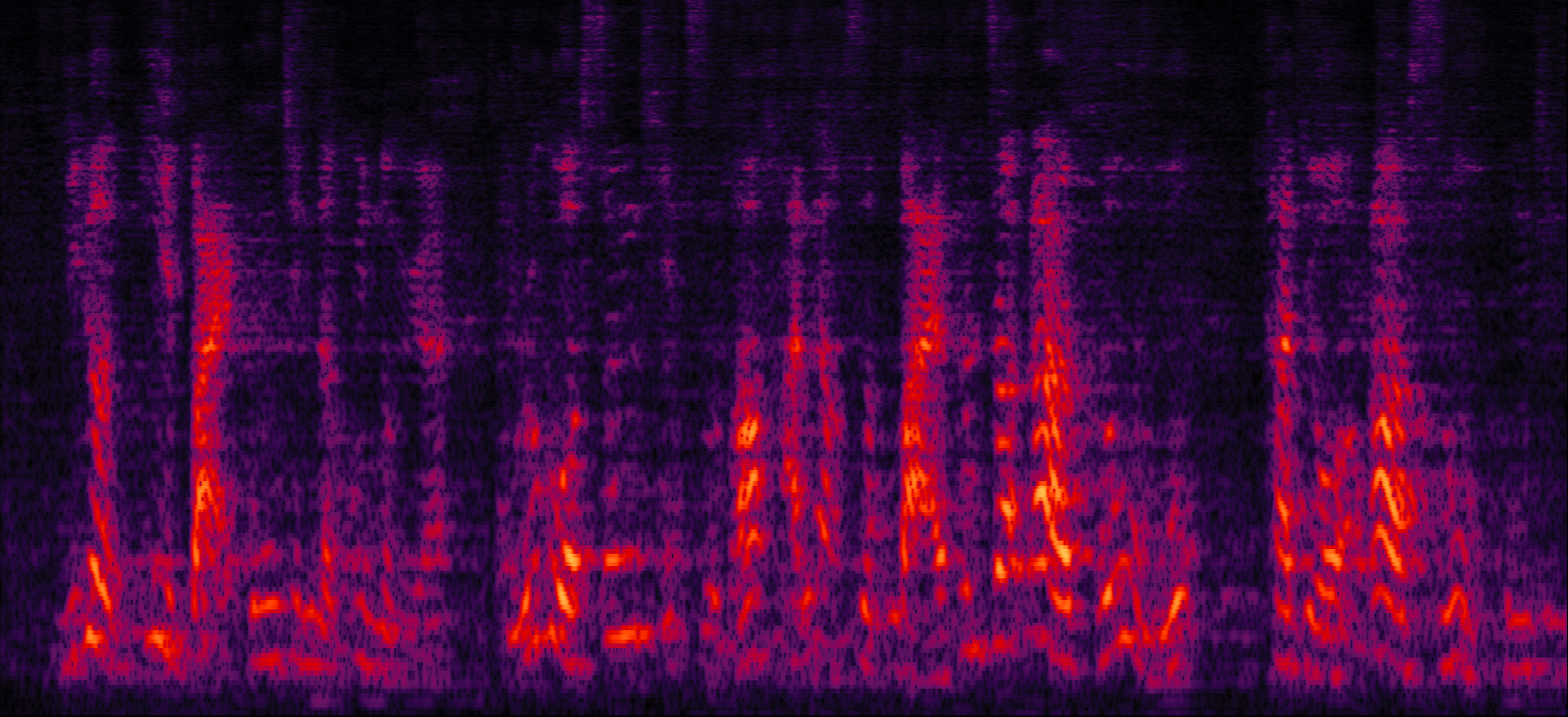

Our proposed MF and MC ADL-MVDR systems introduce hardly any distortions to the target speech while also eliminating the residual noise.

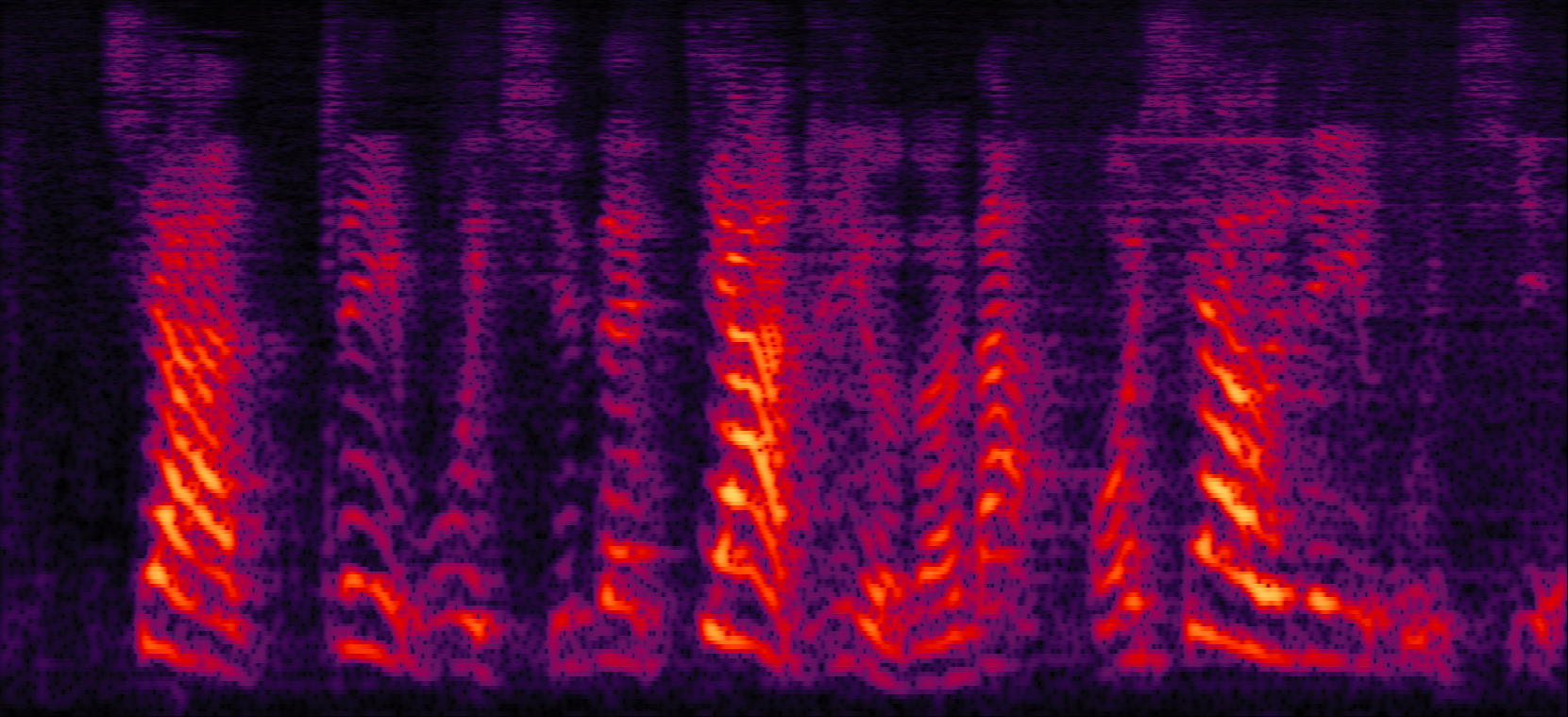

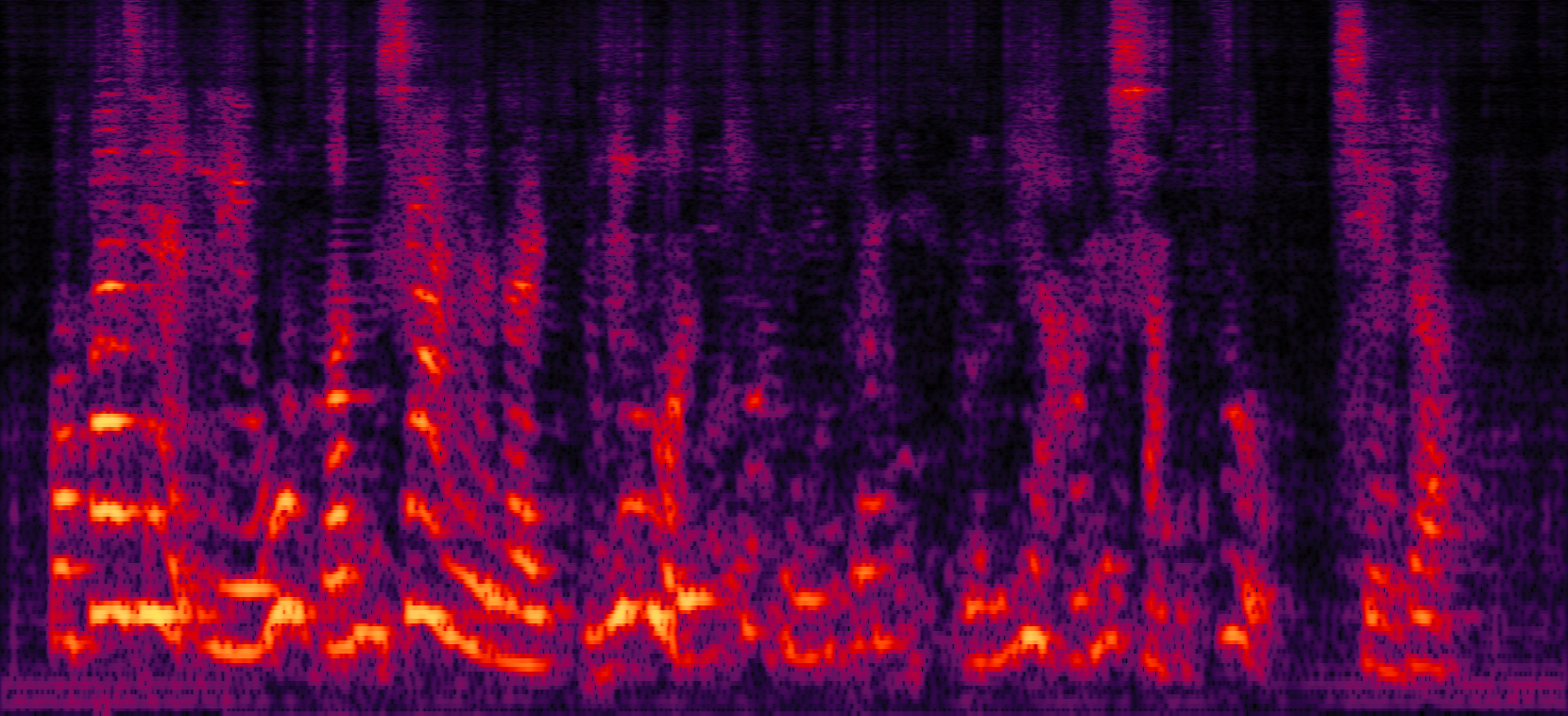

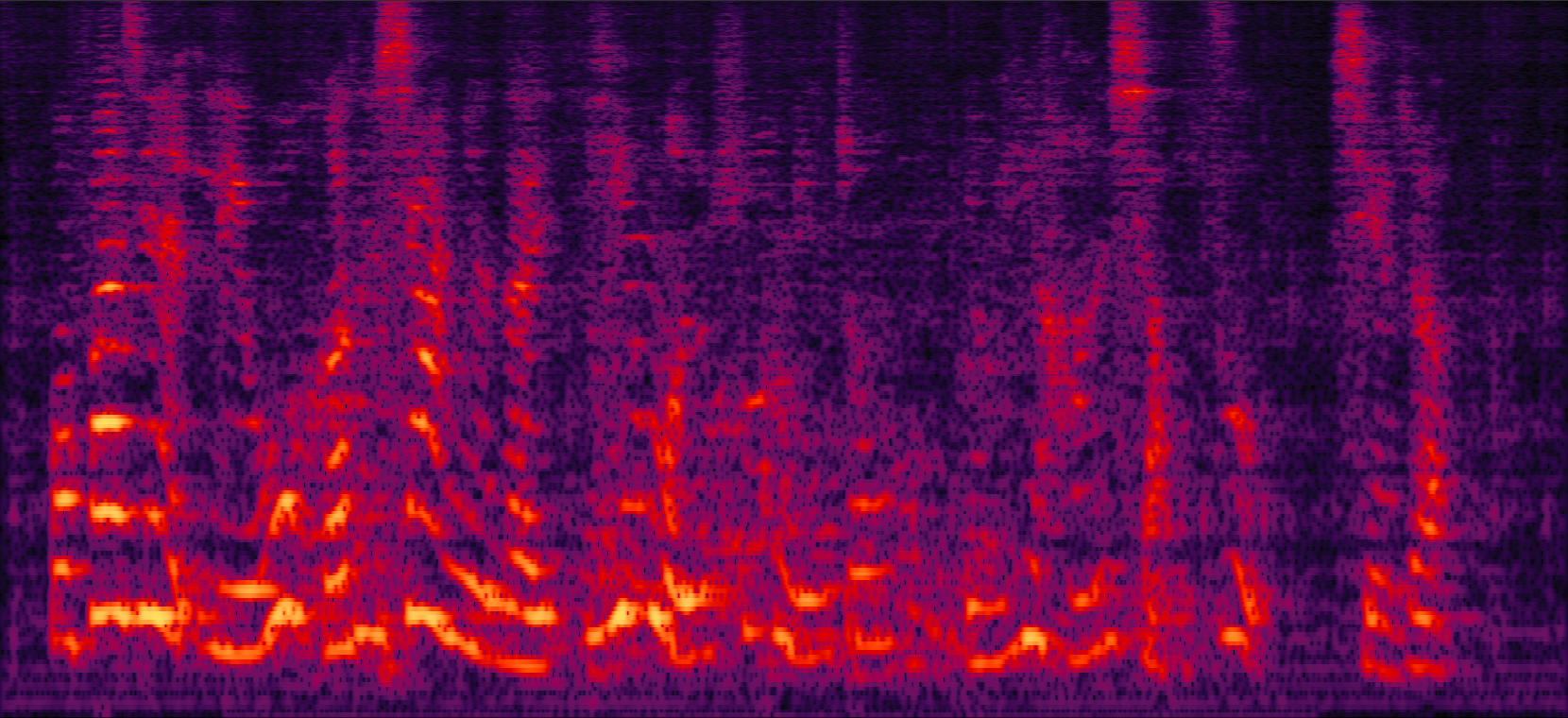

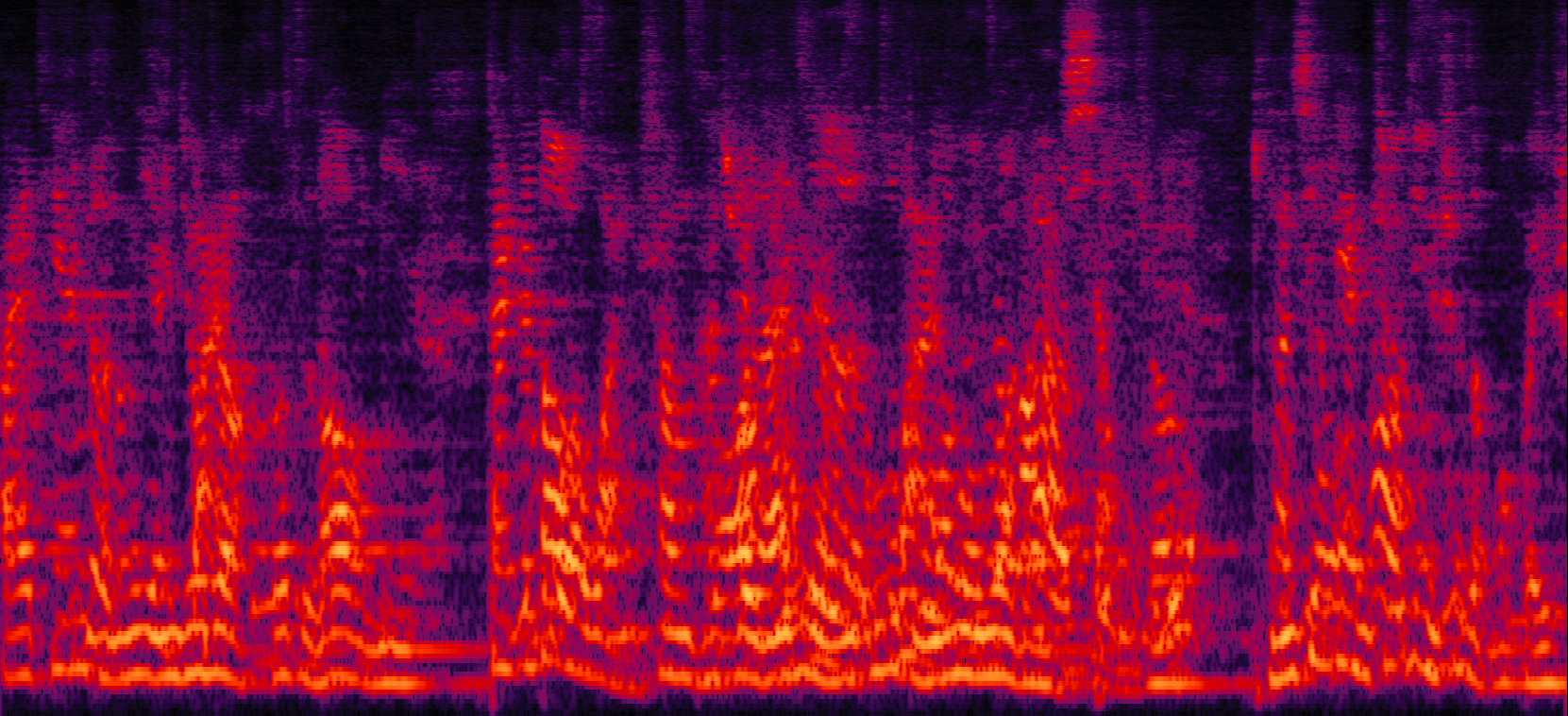

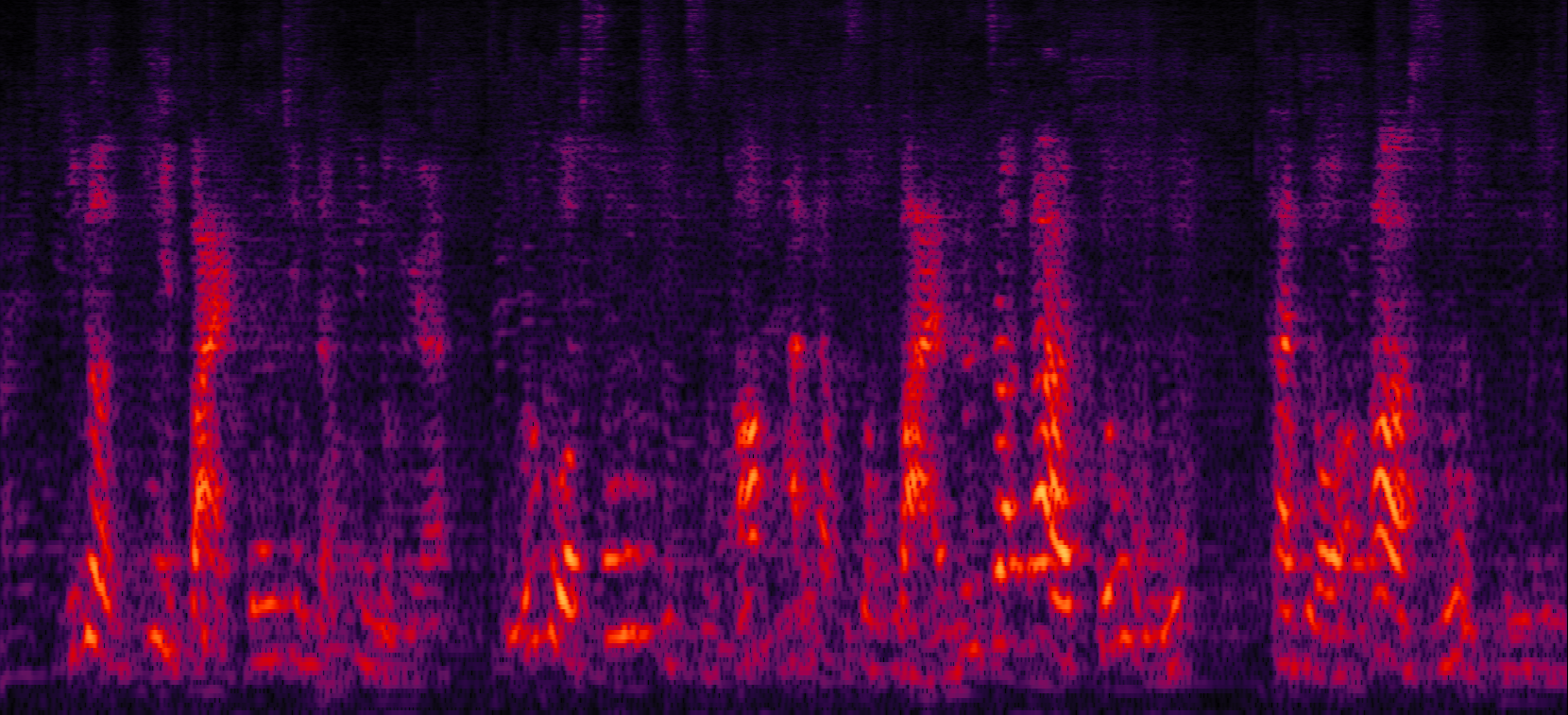

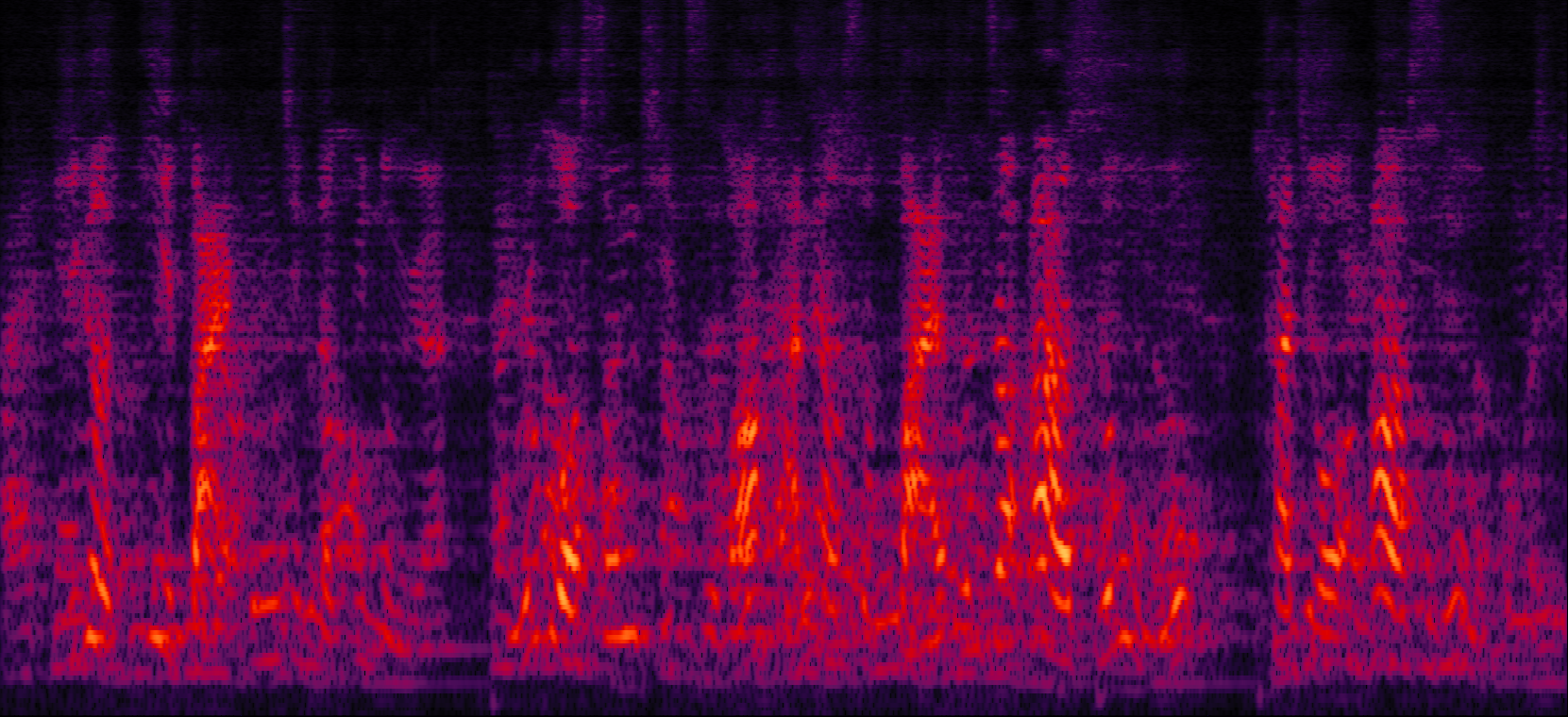

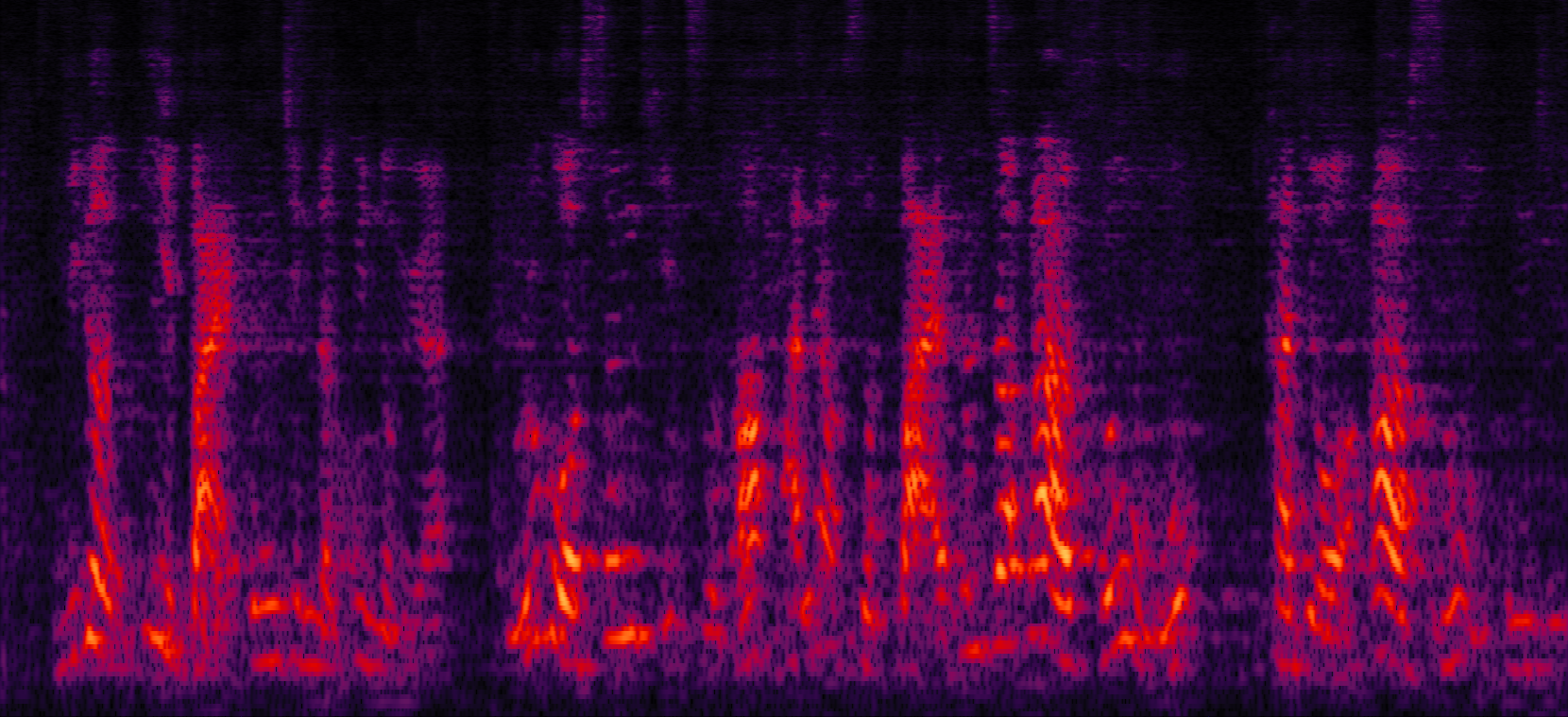

Our proposed MCMF ADL-MVDR systems can further utilize the spatio-temporal cross correlations to achieve even better performance.

Demo 1: Simulated 1-speaker noisy mixture for target speech separation [Sorry that all the demos are recorded in Mandarin Chinese.]

Purely NNs and our proposed MF ADL-MVDR systems:

| Mix (1 speaker + non-stationary additive noise) | Reverberant clean (reference) | NN with cRM | NN with cRF |

| Proposed MF (size-2) ADL-MVDR, MF information t-1 to t | Proposed MF (size-3) ADL-MVDR, MF information t-1 to t+1 | Proposed MF (size-5) ADL-MVDR, MF information t-2 to t+2 |

Conventional MC MVDR systems and our proposed MC ADL-MVDR systems:

| MVDR with cRM | MVDR with cRF | Multi-tap MVDR with cRM (2-tap: [t-1,t]) | Multi-tap MVDR with cRF (2-tap: [t-1,t]) |

| Proposed MC ADL-MVDR with cRF |

Our proposed MCMF ADL-MVDR systems:

| Proposed MCMF ADL-MVDR with cRF (3-channel 3-frame) | Proposed MCMF ADL-MVDR with cRF (9-channel 3-frame) |

Demo 2: Simulated 2-speaker noisy mixture for target speech separation

Purely NNs and our proposed MF ADL-MVDR systems:

| Mix (2 speakers + non-stationary additive noise) | Reverberant clean (reference) | NN with cRM | NN with cRF |

| Proposed MF (size-2) ADL-MVDR, MF information t-1 to t | Proposed MF (size-3) ADL-MVDR, MF information t-1 to t+1 | Proposed MF (size-5) ADL-MVDR, MF information t-2 to t+2 |

Conventional MC MVDR systems and our proposed MC ADL-MVDR systems:

| MVDR with cRM | MVDR with cRF | Multi-tap MVDR with cRM (2-tap: [t-1,t]) | Multi-tap MVDR with cRF (2-tap: [t-1,t]) |

| Proposed MC ADL-MVDR with cRF |

Our proposed MCMF ADL-MVDR systems:

| Proposed MCMF ADL-MVDR with cRF (3-channel 3-frame) | Proposed MCMF ADL-MVDR with cRF (9-channel 3-frame) |

Demo 3: Simulated 3-speaker noisy mixture for target speech separation (waveforms aligned with the spectrograms shown in Fig. 2 of the paper)

Purely NNs and our proposed MF ADL-MVDR systems:

| Mix (3 speakers + non-stationary additive noise) | Reverberant clean (reference) | NN with cRM | NN with cRF |

| Proposed MF (size-2) ADL-MVDR, MF information t-1 to t | Proposed MF (size-3) ADL-MVDR, MF information t-1 to t+1 | Proposed MF (size-5) ADL-MVDR, MF information t-2 to t+2 |

Conventional MC MVDR systems and our proposed MC ADL-MVDR systems:

| MVDR with cRM | MVDR with cRF | Multi-tap MVDR with cRM (2-tap: [t-1,t]) | Multi-tap MVDR with cRF (2-tap: [t-1,t]) |

| Proposed MC ADL-MVDR with cRF |

Our proposed MCMF ADL-MVDR systems:

| Proposed MCMF ADL-MVDR with cRF (3-channel 3-frame) | Proposed MCMF ADL-MVDR with cRF (9-channel 3-frame) |

Real-world scenario: far-field recording and testing:

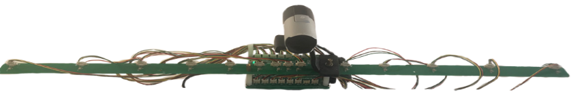

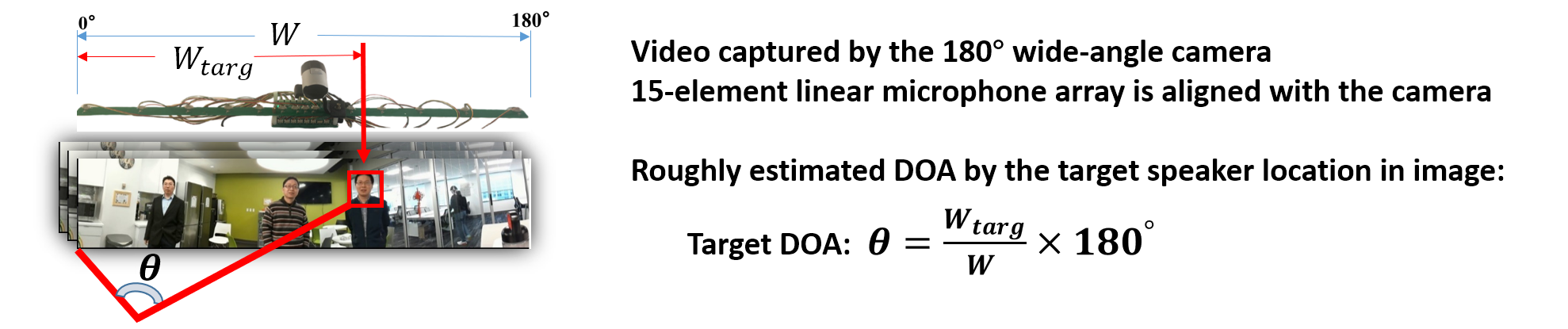

Real-world recording hardware device: 15-element non-uniform linear microphone array and 180-degree wide-angle camera

For the real-world videos, the 180-degree wide-angle camera is calibrated and synchronized with the 15-channel mic array. We estimate the rough direction of arrival (DOA) of the target speaker according to the location of the target speaker in the whole camera view [5]. Face detection is applied to track the target speaker's DOA.
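As a rough illustration of how a face location can be turned into a DOA, the sketch below maps the horizontal pixel position of a detected face linearly onto the camera's 180-degree field of view. The linear mapping, the function name `face_to_doa`, and the convention that 0 degrees is the left edge of the frame are assumptions for this example only; the actual system relies on a calibrated camera, and a real wide-angle lens would generally need a lens-specific correction.

```python
def face_to_doa(face_center_x, frame_width, fov_degrees=180.0):
    """Map a face's horizontal pixel position to a rough DOA in degrees.

    Assumes (hypothetically) a linear relation between pixel column and angle,
    with 0 degrees at the left edge and fov_degrees at the right edge.
    """
    if not 0 <= face_center_x <= frame_width:
        raise ValueError("face_center_x must lie inside the frame")
    return face_center_x / frame_width * fov_degrees

# A face centred in a 1920-pixel-wide frame maps to the broadside direction.
doa = face_to_doa(960, 1920)
```

Tracking the face box over time and re-applying the mapping per frame would then give a DOA track for the target speaker.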

Demo 4: Real-world far-field recording and testing 1:

| Real-world scenario: far-field two-speaker mixture recorded by the camera and microphone array | Real-world scenario: separated female voice by our previously reported multi-tap MVDR (3-tap) method [3] (high level of residual noise) |

| Real-world scenario: separated female voice by the proposed MC ADL-MVDR system. | Real-world scenario: separated female voice by the proposed MCMF (9-channel 3-frame) ADL-MVDR system (face detected in the red rectangle, used for DOA estimation). |

Demo 5: Real-world far-field recording and testing 2:

| Real-world scenario: far-field two-speaker mixture recorded by the camera and microphone array | Real-world scenario: separated male voice by our previously reported multi-tap MVDR (3-tap) method [3] (high level of residual noise) |

| Real-world scenario: separated male voice by the proposed MC ADL-MVDR system | Real-world scenario: separated male voice by the proposed MCMF (9-channel 3-frame) ADL-MVDR system (face detected in the red rectangle, used for DOA estimation). |

References:

[1] Du, Jun, et al. "Robust speech recognition with speech enhanced deep neural networks." Interspeech 2014.

[2] Xiao, Xiong, et al. "On time-frequency mask estimation for MVDR beamforming with application in robust speech recognition." ICASSP 2017.

[3] Xu, Yong, et al. "Neural Spatio-Temporal Beamformer for Target Speech Separation." Accepted to Interspeech 2020.

[4] Zhang, Zhuohuang, et al. "ADL-MVDR: All deep learning MVDR beamformer for target speech separation." arXiv preprint arXiv:2008.06994 (2020).

[5] Tan, Ke, et al. "Audio-visual speech separation and dereverberation with a two-stage multimodal network." IEEE Journal of Selected Topics in Signal Processing (2020).

[6] Luo, Yi, and Nima Mesgarani. "Conv-TasNet: Surpassing ideal time–frequency magnitude masking for speech separation." IEEE/ACM Transactions on Audio, Speech, and Language Processing 27.8 (2019): 1256-1266.